Integración de la Inteligencia Artificial (IA) en la Educación Superior Rural Filipina: Perspectivas, desafíos y consideraciones éticas

Artificial Intelligence (AI) integration in Rural Philippine Higher Education: Perspectives, challenges, and ethical considerations

Resti Tito H. Villarino

Local Research Ethics Committee, Cebu Technological University, Moalboal, Cebu, Philippines

Medical Sciences Division, National Research Council of the Philippines, Manila, Philippines

https://orcid.org/0000-0002-5752-1742

restitito.villarino@ctu.edu.ph

RESUMEN

La IA está remodelando rápidamente los panoramas de aprendizaje desde países altamente industrializados hasta aquellos que aún están en desarrollo, como Filipinas. Sin embargo, se han realizado estudios limitados sobre cómo estas herramientas de IA son adoptadas y percibidas por los estudiantes universitarios en un contexto de educación superior no urbano. Este estudio llena ese vacío investigando la adopción, percepciones e implicaciones éticas de las herramientas de IA entre estudiantes universitarios rurales filipinos a través de un enfoque de encuesta transversal de método mixto explicativo secuencial, basándose en 451 estudiantes de una universidad estatal rural en Cebú, Filipinas, de mayo a junio de 2024. Se utilizó IBM SPSS versión 26.0 para realizar los análisis estadísticos, mientras que los análisis temáticos se realizaron utilizando MAXQDA versión 2020. Entre los encuestados, todos habían utilizado herramientas de IA, mientras que la mayor proporción de estos estudiantes (78.54 %) utilizó ChatGPT. Además, los estudiantes creían firmemente que la IA era fácil de usar (M = 5.13; DE = ±1.58) y útil en su aprendizaje (M = 5.17; DE = ±1.53). Por el contrario, los estudiantes estaban preocupados por la información incorrecta o sesgada (M=5.35, DE=±1.40), el impacto en el pensamiento crítico (M=5.04, DE=±1.77) y el potencial de hacer trampa (M=5.39, DE=±1.50) al utilizar estas herramientas de IA. Además, solo el 17.29 % de los estudiantes conocía las políticas institucionales sobre el uso de la IA. Este estudio indica la necesidad de crear directrices institucionales claras para el uso de la IA, diseñar programas de alfabetización en IA y revisar la suposición sobre la brecha digital en las instituciones de educación superior rurales. 
Estos hallazgos también tienen implicaciones políticas en vista del desarrollo curricular y la ética para integrar la IA en contextos de educación superior y establecen la necesidad de estrategias educativas que aprovechen los beneficios ofrecidos por la IA mientras cultivan activamente las habilidades de pensamiento crítico y la integridad académica de los estudiantes.

PALABRAS CLAVE

Inteligencia Artificial; Tecnología Educativa; Educación Superior; Educación Rural; consideraciones Éticas; Filipinas.

ABSTRACT

AI is rapidly reshaping learning landscapes, from highly industrialized countries to developing ones such as the Philippines. However, few studies have examined how college students in non-urban higher education contexts adopt and perceive AI tools. This study addresses that gap by investigating the adoption, perceptions, and ethical implications of AI tools among rural Philippine college students through a sequential explanatory mixed-method cross-sectional survey of 451 students at a rural state college in Cebu, Philippines, conducted from May to June 2024. Statistical analyses were conducted in IBM SPSS version 26.0, and thematic analyses in MAXQDA version 2020. All respondents had used AI tools, and ChatGPT was the most commonly used tool (78.54 %). Students somewhat agreed that AI was easy to use (M = 5.13; SD = ±1.58) and helpful in their learning (M = 5.17; SD = ±1.53). At the same time, they were concerned about incorrect or biased information (M = 5.35; SD = ±1.40), the impact on critical thinking (M = 5.04; SD = ±1.77), and the potential for cheating (M = 5.39; SD = ±1.50) when using these tools. Only 17.29 % of the students were aware of institutional policies on the use of AI. The findings point to the need for clear institutional guidelines on AI use, AI literacy programs, and a re-examination of assumptions about the digital divide in rural higher education institutions. They also carry policy implications for curriculum development and the ethics of integrating AI into higher education, and they underscore the need for educational strategies that draw on the benefits of AI while actively cultivating students’ critical thinking skills and academic integrity.

KEYWORDS

Artificial Intelligence; Educational Technology; Higher Education; Rural Education; Ethical Considerations; Philippines.

1. INTRODUCTION

Artificial intelligence (AI) tools are reshaping higher education worldwide at a rapid pace, including in developing countries such as the Philippines (Estrellado & Miranda, 2023). These tools promise personalized learning, rapid assessment, and tailored support (Khanduri & Teotia, 2023); however, their integration into educational systems remains contested, especially in rural areas with limited technological infrastructure and low digital literacy (Celik, 2023).

AI adoption in the Philippines is still at an early stage, with wide disparities between urban and rural areas. Estrellado and Miranda (2023) call for caution and further inquiry before AI is implemented in Philippine education. The digital divide is widened further by Internet connectivity problems and widespread device scarcity in the more rural parts of the country (Whitelock-Wainwright et al., 2023).

Intelligent tutoring systems and adaptive learning promise instruction tailored to the pace and style of the individual student (Cai et al., 2022). For rural Filipino students, who often face resource constraints, these tools can open access to educational experiences that were previously unavailable in their communities (Saadatzi et al., 2022). However, as Asirit and Hua (2023) observed, readiness for and use of AI vary widely across Philippine higher education, which motivates interest in bringing together perspectives on AI integration.

The literature, however, reaches conflicting conclusions about the effectiveness and acceptance of AI in education. While some studies report positive attitudes toward AI-driven educational tools (An et al., 2023; Welding, 2023), others raise key concerns (Limna et al., 2023; Montenegro-Rueda et al., 2023), including the reliability of AI-based grading, possible algorithmic bias, and privacy (Zhai et al., 2021). In a survey of Philippine college students, Obenza et al. (2023) investigated the mediating effect of trust in AI on self-efficacy and attitudes toward AI and found intricate relationships that warrant further study.

In addition, values and ways of learning in rural Philippine communities remain rooted in established cultural contexts that may resist the introduction of AI tools such as ChatGPT, which could limit their acceptance and effectiveness (Zawacki-Richter et al., 2019). Goli-Cruz (2024) examined the attitudes of HEI faculty toward using ChatGPT for educational purposes and concluded that AI in education should be discussed from the perspectives of both students and staff.

The distinctive socio-economic landscape of the rural Philippines further complicates the integration of AI in education, since socio-economic status is associated with both technology adoption and educational outcomes (Villarino et al., 2023b). Relatedly, limited financial resources among institutions and individuals may make it hard to adopt state-of-the-art AI tools (Duan et al., 2023).

Although AI in education has been studied, major research gaps remain regarding the perceptions of rural college students, particularly in the Philippines (Gao et al., 2022). Previous research has focused on urban or developed settings, so the challenges and opportunities facing rural Filipino students remain poorly documented. Moreover, the mixed results of existing studies strengthen the case for context-specific research (Xue et al., 2024). This paper therefore examines how rural college students understand and experience AI tools in their academic work, addressing these gaps in the literature.

This cross-sectional study used survey methodology to address the following objectives: 1) assess the awareness and usage of AI tools among rural Philippine college students; 2) examine students’ perceptions of the usefulness, ease of use, and ethical concerns of AI tools; 3) explore experiences with AI tools for various academic tasks and the frequency of use; 4) determine whether the student respondents were aware of institutional policies and perceived a need for guidance or training on the use of AI; 5) evaluate the benefits and concerns related to the use of AI tools in education; and 6) gather students’ expectations about the future impact of AI tools.

1.1. Theoretical Foundations

1.1.1 Artificial Intelligence (AI) in Education

Generative Artificial Intelligence (GenAI) refers to machine learning algorithms designed to produce novel data samples that closely resemble existing datasets (Chan & Hu, 2023). In the educational context, AI encompasses various technologies, including GenAI tools such as ChatGPT, Google Gemini, and DALL-E, which are becoming increasingly prevalent in academic settings (Firoozabadi et al., 2023).

The integration of AI in education has seen significant developments since late 2022, with tools like ChatGPT, an autoregressive large language model with over 175 billion parameters, gaining prominence (Limna et al., 2023). These AI systems can generate human-like responses to text-based inputs and comprehend diverse information sources, including academic literature and online resources (Bhattacharya et al., 2023; Biswas, 2023; Kitamura, 2023).

1.1.2 Utilization of AI in Education: Advantages and Disadvantages

AI tools offer numerous benefits in educational settings. They can augment students’ learning experiences by providing innovative content and personalized feedback (Chan & Lee, 2023). AI applications like ChatGPT enable students to generate ideas, receive constructive criticism on their work, and engage in brain-stimulating activities that enhance cognitive abilities.

In research and academic writing, AI tools assist in idea generation, information synthesis, and textual summarization (Qasem, 2023). They also show promise in learning assessment, with tools like the Intelligent Essay Assessor used to evaluate students’ written assignments and provide timely feedback (Crompton & Burke, 2023). Mizumoto and Eguchi (2023) found that AI tools like ChatGPT can efficiently grade essays, maintain scoring uniformity, and offer immediate assessments, potentially revolutionizing teaching and learning methodologies.

However, integrating AI into education also presents challenges and ethical concerns. Kumar (2023) highlighted plagiarism and academic integrity issues, noting that AI-generated content may include improper references and a lack of personal opinions. There are also concerns about potential biases, inaccuracies, and the generation of misleading or harmful content (Harrer, 2023; Maerten & Soydaner, 2023). The reliance on AI tools may also impact critical thinking skills and create a dependency that could hinder genuine learning (Lubowitz, 2023).

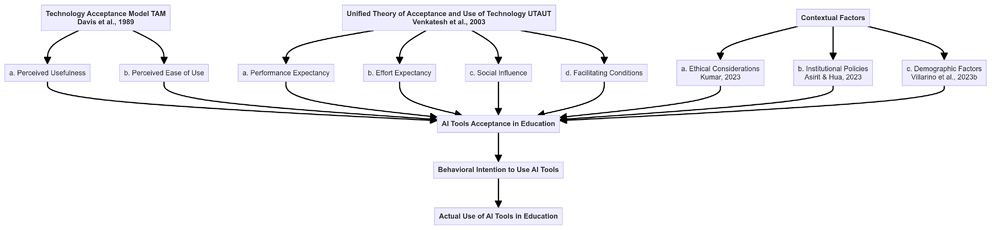

Figure 1. Theoretical Framework.

1.1.3 Students’ Perceptions of AI Tools in Education

Based on the literature review and the focus of this study, a theoretical framework can be constructed (Figure 1) using the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT) as foundational theories.

Students’ perceptions of AI tools significantly influence their adoption and use in academic settings. Factors such as perceived usefulness, ease of use, and ethical considerations are crucial in shaping these perceptions (Chan & Hu, 2023; Das et al., 2023). The Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT) provide theoretical frameworks for understanding these factors.

Research has shown varied student responses to AI tools. Some studies report positive attitudes, with students finding AI-based chatbots helpful for educational assistance and for improving learning outcomes, self-efficacy, and motivation (Gayed et al., 2022; Lee et al., 2022). Students appreciate the prompt responsiveness, interactive nature, and personalized support that AI tools offer. However, concerns persist regarding the potential impact of AI on employment opportunities and the reduction of human engagement in education (Biswas, 2023; Chan & Hu, 2023). Some students also worry about becoming overly reliant on AI, which could hamper their critical thinking skills or lead to academic dishonesty.

2. MATERIALS AND METHODS

2.1 Research Design

This study employed a sequential explanatory mixed-method cross-sectional survey design to explore rural college students’ perspectives on and experiences with artificial intelligence (AI) tools in the classroom. The design was chosen because it captures the quantitative dimension through a structured questionnaire and the qualitative dimension through validated open-ended questions that support the quantitative data, providing a comprehensive snapshot of the respondents’ perspectives and experiences, in line with the American Psychological Association (2020) guidelines.

2.2 Setting and Samples

The study was conducted from May to June 2024 among college students enrolled in a rural state college in Cebu, Philippines, for the second semester of the academic year 2023-2024. A letter was sent to the Student Affairs Office (SAO) and the Supreme Student Council (SSG) prior to the study, and a voluntary response sampling method was employed. Fourth-year students were excluded because they were on on-the-job training (OJT), as were students who declined to participate or did not sign the informed consent form (ICF).

An open invitation to participate in the study was distributed online to all eligible college students. This method allows for a self-selected sample based on respondents’ willingness and availability to participate in the research (Fricker, 2008; Sterba & Foster, 2008). After a week of open invitation, 455 students responded and initially provided their consent to participate. However, four students failed to submit their signed informed consent forms within the following two days, resulting in a final sample size of 451.

The achieved sample size of 451 exceeded the minimum required sample of 137, as determined using G*Power 3.1.9.7 for detecting a medium effect size in a mixed-method study. This sample size enhances the study’s statistical power and generalizability (Edmonds & Kennedy, 2017). While voluntary response sampling can be efficient and cost-effective, its limitations include (1) potential self-selection bias (Bethlehem, 2010); (2) over-representation of individuals with strong opinions, since those with particularly strong views on AI in education might be more likely to participate, potentially skewing results (Greenacre, 2016); and (3) limited generalizability (Etikan et al., 2016).

To mitigate the limitations of voluntary response sampling, particularly the self-selection bias noted by Bethlehem (2010), the author evaluated the potential for such bias when analyzing the data and interpreting the results, bearing in mind how it might affect the findings (Greenacre, 2016). The author also compared the demographics of the study sample with those of the general student population at the institution, as suggested by Sterba and Foster (2008), as one way of evaluating representativeness.

In addition, the author triangulated quantitative and qualitative data to give a more comprehensive account of the phenomena under study and thereby reduce the risk of single-source bias (Edmonds & Kennedy, 2017). Following the reporting practices identified by Taber (2018), these limitations are declared in the discussion of the results and their implications to make the constraints of the study transparent.

2.3 Measurement and Data Collection

Data collection was conducted using a modified, contextualized, and validated questionnaire adapted from previously published research on the utilization of AI tools in the classroom (An et al., 2023; Chan & Hu, 2023; Das et al., 2023; Khanduri & Teotia, 2023; Kumar, 2023; Limna et al., 2023). The questionnaire was administered online via Google Forms.

Furthermore, the instrument comprised six sections: (1) socio-demographic characteristics; (2) beliefs about AI and ethics (Chan & Hu, 2023; Welding, 2023); (3) use of AI tools (Limna et al., 2023; Tanvir et al., 2023); (4) perception of instructor and institutional policies on AI tools in education (Chan & Lee, 2023; Gayed et al., 2022); (5) concerns regarding AI use in the classroom (Chan & Hu, 2023; Tanvir et al., 2023); and (6) overall perceptions of the utilization of AI tools in the classroom (Dahmash et al., 2020; Lee et al., 2022).

Also, the questionnaire was validated using the Research Instrument Validation Framework (RIVF) developed by Villarino (2024). Three experts validated the instrument: an educational technology professor, a language expert, and a researcher with expertise in education and social science. This multi-faceted approach ensured the questionnaire’s content validity, linguistic appropriateness, and construct reliability (Polit & Beck, 2021). Additionally, the internal consistency reliability was assessed using Cronbach’s alpha among a pilot group of 50 college students not included in the main study. The Cronbach’s alpha values for the six sections ranged from 0.72 to 0.84, indicating acceptable to high internal consistency reliability, with an overall score of 0.78 across all sections (Taber, 2018; American Psychological Association, 2020). These validation efforts strengthened the questionnaire’s validity, reliability, and relevance, enhancing the overall quality and significance of the research findings.
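As an illustration of the internal consistency check described above, Cronbach’s alpha can be computed directly from item-level scores as α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The following is a minimal sketch in Python, not the SPSS procedure used in the study; the function name `cronbach_alpha` and the pilot scores are hypothetical and serve only to show the calculation.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows (one score per item).

    Hypothetical helper for illustration; assumes complete data and a
    non-zero variance of total scores.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Made-up pilot data: 5 respondents x 3 items on a 7-point Likert scale.
pilot = [
    [5, 6, 5],
    [4, 4, 5],
    [6, 7, 6],
    [3, 4, 3],
    [5, 5, 6],
]
alpha = cronbach_alpha(pilot)
print(f"Cronbach's alpha: {alpha:.2f}")
```

In practice the same function would be applied separately to the items of each questionnaire section, which is how section-level values such as those reported above are usually obtained.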

2.4 Data Analysis

Socio-demographic characteristics were expressed as frequencies and percentages. The college students’ beliefs, use of AI tools, perceptions, concerns, and overall perceptions were described as means with standard deviations. Thematic analysis was used to identify common themes in the open-ended questions, specifically in section 5 (future directions) and section 6 (additional feedback). Statistical analyses were performed using the Statistical Package for Social Science (SPSS) software for Windows version 26.0 (IBM 2019), and the thematic analysis was performed using MAXQDA version 2020 (Verbi Software 2019).

2.5 Scoring Procedure

Table 1 indicates the scale with range and the corresponding verbal description and interpretation for the student respondents’ level of agreement on the 12 statements in section 2 on the perceptions of AI in education. After receiving the completed questionnaires, the researchers examined the responses for completeness and accuracy.

Table 1. Scoring range for the 7-point Likert scale (strongly disagree to strongly agree).

| Scale | Range | Verbal Description | Explanation |
| --- | --- | --- | --- |
| 7 | 6.16-7.00 | SA (Strongly Agree) | The level of agreeableness towards the statement is very high. |
| 6 | 5.30-6.15 | A (Agree) | The level of agreeableness towards the statement is high. |
| 5 | 4.44-5.29 | SWA (Somewhat Agree) | The level of agreeableness towards the statement is slightly high. |
| 4 | 3.58-4.43 | N (Neutral) | The level of agreeableness towards the statement is neither high nor low. |
| 3 | 2.72-3.57 | SWD (Somewhat Disagree) | The level of agreeableness towards the statement is slightly low. |
| 2 | 1.86-2.71 | D (Disagree) | The level of agreeableness towards the statement is low. |
| 1 | 1.00-1.85 | SD (Strongly Disagree) | The level of agreeableness towards the statement is very low. |
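The scoring ranges in Table 1 amount to a simple lookup from a computed mean to a verbal description. A minimal sketch of that lookup is shown below; the names `BANDS` and `describe` are hypothetical, and rounding the mean to two decimals before comparison is an assumption made here so that the bands, which are stated to two decimals, leave no gaps.

```python
# Lower bound of each band from Table 1, highest band first.
# Note that "SD" here is the verbal label Strongly Disagree,
# not a standard deviation.
BANDS = [
    (6.16, "SA"),   # Strongly Agree
    (5.30, "A"),    # Agree
    (4.44, "SWA"),  # Somewhat Agree
    (3.58, "N"),    # Neutral
    (2.72, "SWD"),  # Somewhat Disagree
    (1.86, "D"),    # Disagree
    (1.00, "SD"),   # Strongly Disagree
]


def describe(mean):
    """Return the Table 1 verbal description for a 7-point Likert mean."""
    if not 1.0 <= mean <= 7.0:
        raise ValueError("mean must lie between 1.00 and 7.00")
    score = round(mean, 2)  # assumption: compare at two-decimal precision
    for lower, label in BANDS:
        if score >= lower:
            return label


for m in (5.13, 5.35, 4.83):
    print(m, describe(m))
```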

3. RESULTS

3.1 Respondents’ Profile

Table 2 presents the demographic profile of the student respondents. A total of 451 students provided data on AI tool usage, perceptions, and concerns, which will be explored in subsequent study sections. The insights gained from this diverse sample will contribute to our understanding of how AI is being integrated into and perceived within rural Philippine higher education contexts.

Table 2. Student Respondents Demographic Profile (n=451)

| Characteristic | Frequency (f) | Percentage (%) |
| --- | --- | --- |
| Age | | |
| 20 | 36 | 7.98 |
| 21 | 173 | 38.36 |
| 22 | 179 | 39.69 |
| 23 | 45 | 9.98 |
| 24 | 13 | 2.88 |
| 25 | 4 | 0.89 |
| 26 | 1 | 0.22 |
| Total | 451 | 100.00 |
| Sex | | |
| Male | 176 | 39.02 |
| Female | 275 | 60.98 |
| Total | 451 | 100.00 |
| Course | | |
| Computer Technology | 135 | 29.93 |
| Automotive | 90 | 19.96 |
| Electricity | 81 | 17.96 |
| Education | 63 | 13.97 |
| Hospitality Management | 36 | 7.98 |
| Drafting | 18 | 3.99 |
| Fishery Education | 14 | 3.10 |
| Welding and Fabrication | 14 | 3.10 |
| Total | 451 | 100.00 |
| Year Level | | |
| First Year | 59 | 13.08 |
| Second Year | 180 | 39.91 |
| Third Year | 212 | 47.01 |
| Total | 451 | 100.00 |

The age distribution of respondents is predominantly concentrated between 21 and 22 years old, comprising 78.05 % of the sample (38.36 % for 21-year-olds and 39.69 % for 22-year-olds). This age range aligns with typical college student demographics in the Philippines, as observed by Villarino et al. (2023a) in their study on rural college students. The mean age of approximately 21.7 years is consistent with the expected age range for college students in the Philippines (Villarino et al., 2022a). This age concentration suggests a relatively homogeneous cohort in terms of generational experiences and technological exposure, which may influence their perceptions and adoption of AI tools (Xue et al., 2024).
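The descriptive figures in this paragraph follow from the age frequencies in Table 2. The sketch below recomputes them in Python rather than in SPSS; the variable names are illustrative. From these frequencies the weighted mean comes out at about 21.65 years, in line with the approximate figure quoted above.

```python
# Age frequencies as reported in Table 2.
age_counts = {20: 36, 21: 173, 22: 179, 23: 45, 24: 13, 25: 4, 26: 1}

# Total number of respondents.
n = sum(age_counts.values())

# Mean age weighted by the number of respondents at each age.
mean_age = sum(age * count for age, count in age_counts.items()) / n

# Combined percentage of 21- and 22-year-olds.
share_21_22 = 100 * (age_counts[21] + age_counts[22]) / n

print(f"n = {n}")
print(f"mean age = {mean_age:.2f}")
print(f"aged 21-22 = {share_21_22:.2f} %")
```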

The gender distribution reveals a higher proportion of female students (60.98 %) compared to male students (39.02 %). This disparity aligns with the general trend in Philippine higher education, as noted by Estrellado and Miranda (2023) in their study on AI in the Philippine educational context. The predominance of female students in higher education is a global trend that may have significant implications for AI adoption and usage patterns in academic settings (Whitelock-Wainwright et al., 2023).

The distribution of courses reflects a strong focus on technical and vocational programs, with Computer Technology (29.93 %), Automotive (19.96 %), and Electricity (17.96 %) being the top three courses. This emphasis on STEM and vocational fields is characteristic of state technological institutions in rural areas of the Philippines. Barajas et al. (2024) highlighted a similar trend in their assessment of AI integration in Philippine engineering programs. The significant proportion of students in technology-related fields (67.85 % in the top three courses) may positively influence the overall familiarity and comfort with AI tools in academic settings (Gao et al., 2022). However, it also raises questions about potential disparities in AI exposure and adoption across different academic disciplines, particularly in non-technical fields (Estrellado & Miranda, 2023).

The sample is predominantly composed of upper-level students, with third-year (47.01 %) and second-year (39.91 %) students making up the majority, while first-year students comprise only 13.08 %. This distribution allows for insights from students with more extensive exposure to various academic tasks and potentially greater experience with AI tools. The preponderance of upper-level students suggests that the perspectives gathered may be more informed by extended experience in higher education and possibly greater exposure to AI tools in academic contexts (Saadatzi et al., 2022). However, the relatively low representation of first-year students may limit insights into the initial adoption and perception of AI tools among newcomers to higher education.

3.2 AI Tool Usage

The data on AI tool usage among rural Philippine college students are presented in Table 3. This information is vital for comprehending the current landscape of AI integration in higher education and provides insights into students’ familiarity with and utilization of AI tools for academic purposes.

The data indicate that all respondents (100 percent) were familiar with AI tools and had used them. This exceptionally high adoption rate surpasses findings from previous studies, such as those of Asirit and Hua (2023), who reported varying levels of AI readiness in Philippine higher education. It could indicate a rapid increase in AI tool adoption among rural Philippine college students or, alternatively, a sample bias towards more tech-savvy students.

Moreover, ChatGPT emerged as the dominant AI tool among the respondents, accounting for 78.54 % of all tool selections (432 of the 550 responses to this check-all-that-apply item). This aligns with global trends noted by Limna et al. (2023) regarding the popularity of large language models in education. The limited use of other AI tools (Google Gemini at 7.09 % and Gamma at 3.63 % of selections) suggests a potential lack of diversity in AI tool exposure, which could be addressed through more comprehensive AI literacy programs, as suggested by Villaceran et al. (2024).

Also, a significant portion of students (39.03 %) reported using AI tools very often (multiple times a week), with another 34.81 % using them sometimes (once a month). This frequent usage indicates a high integration of AI tools into academic routines, supporting Zekaj’s (2023) assertion that AI tools are becoming educational allies. However, it also raises questions about potential over-reliance on AI, a concern echoed by Lubowitz (2023).

Table 3. AI tool usage among the respondents.

| Statements | Frequency (f) | Percentage (%) |
| --- | --- | --- |
| 1. Before this survey, were you familiar with AI tools designed to help with schoolwork? | | |
| Yes | 451 | 100.00 |
| No | 0 | 0.00 |
| I’m not sure | 0 | 0.00 |
| Total | 451 | 100.00 |
| 2. Have you ever used AI tools for academic purposes (e.g., assignments, essays, research)? | | |
| Yes | 451 | 100.00 |
| No | 0 | 0.00 |
| I’m not sure what qualifies as an AI tool | 0 | 0.00 |
| Total | 451 | 100.00 |
| 3. If you answered Yes to the previous question, which AI tools have you used for schoolwork? (Check all that apply) | | |
| ChatGPT | 432 | 78.54 |
| Google Gemini | 39 | 7.09 |
| Claude | 0 | 0.00 |
| Llama | 0 | 0.00 |
| Minstral | 0 | 0.00 |
| DALL-E | 0 | 0.00 |
| Jasper | 0 | 0.00 |
| Gamma | 20 | 3.63 |
| Jenny AI | 0 | 0.00 |
| Other (based on the responses include Cici, Perplexity, and Co-pilot) | 59 | 10.73 |
| Total responses (multiple) | 550 | 100.00 |
| 4. How often do you typically use AI tools for academic purposes? | | |
| Never | 0 | 0.00 |
| Rarely (a few times a semester) | 59 | 13.08 |
| Sometimes (once a month) | 157 | 34.81 |
| Often (once a week) | 59 | 13.08 |
| Very often (multiple times a week) | 176 | 39.03 |
| Total | 451 | 100.00 |
| 5. For which of the following academic tasks have you used AI tools? (Check all that apply) | | |
| Researching information | 353 | 34.61 |
| Generating ideas or outlines | 216 | 21.18 |
| Writing essays or assignments | 157 | 15.39 |
| Editing and proofreading | 98 | 9.61 |
| Solving math or science problems | 59 | 5.78 |
| Creating presentations or visual content | 137 | 13.43 |
| Other | 0 | 0.00 |
| Total responses (multiple) | 1020 | 100.00 |

With regard to academic tasks, researching information (34.61 % of responses) and generating ideas or outlines (21.18 %) were the most common uses of AI tools. This usage pattern aligns with the findings of Qasem (2023) on the benefits of AI in idea generation and information synthesis. However, the significant use of AI for writing essays or assignments (15.39 %) raises ethical concerns about academic integrity, as highlighted by Kumar (2023).
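Because items 3 and 5 in Table 3 allow multiple selections, their percentages are computed over the total number of responses rather than over the 451 respondents. The sketch below illustrates the difference for item 3 using the non-zero counts from Table 3; the respondent-based figure is an additional calculation shown here for comparison only and is not reported in the study, and all variable names are illustrative.

```python
# Non-zero tool counts from Table 3, item 3 (check all that apply).
tool_counts = {"ChatGPT": 432, "Google Gemini": 39, "Gamma": 20, "Other": 59}
respondents = 451

# Base used in Table 3: total selections across all tools.
total_responses = sum(tool_counts.values())

# Share of all selections (matches Table 3 to within rounding).
share_of_responses = {
    tool: 100 * count / total_responses for tool, count in tool_counts.items()
}

# Share of respondents who selected each tool (not reported in the study).
share_of_respondents = {
    tool: 100 * count / respondents for tool, count in tool_counts.items()
}

for tool in tool_counts:
    print(
        f"{tool}: {share_of_responses[tool]:.2f} % of responses, "
        f"{share_of_respondents[tool]:.2f} % of respondents"
    )
```

Read this way, the 432 ChatGPT selections correspond to roughly 96 % of the 451 respondents, so the response-based percentage understates how widespread ChatGPT use is in the sample.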

3.3 Students’ Perceptions of AI in Education

Table 4 presents the overall computed means with standard deviations (SD) of students’ perceptions regarding AI tools in education. The data provide insights into students’ attitudes towards the ease of use, helpfulness, ethical considerations, and potential impacts of AI tools in their academic pursuits. In terms of ease of use and helpfulness, students generally find AI tools easy to use (Mean=5.13, SD=±1.58) and helpful in their learning (Mean=5.17, SD=±1.53). This aligns with findings from Chan and Hu (2023), who reported positive perceptions of AI tools among college students. The ease-of-use finding is particularly notable in the rural Philippine context, as it suggests that students can navigate these technologies despite potential infrastructure challenges.

Furthermore, on the impact of AI tools on work quality and time management, respondents somewhat agree that AI tools improve their work quality (Mean=5.00, SD=±1.68) and save time (Mean=5.13, SD=±1.60). This supports Zekaj’s (2023) assertion that AI tools are becoming valuable educational allies. However, the standard deviation indicates some variability in opinions, possibly reflecting differences in individual experiences or access to AI tools.

Table 4. Overall Mean with SD on the students’ perceptions of AI in Education.

| Statements | Mean | Standard Deviation (SD) | Description |
| --- | --- | --- | --- |
| 1. I find AI tools easy to use for my schoolwork. | 5.13 | ±1.58 | SWA |
| 2. AI tools have been helpful in my learning. | 5.17 | ±1.53 | SWA |
| 3. Using AI tools has improved the quality of my work. | 5.00 | ±1.68 | SWA |
| 4. AI tools save me time when doing schoolwork. | 5.13 | ±1.60 | SWA |
| 5. I am confident in using AI tools effectively for academic tasks. | 4.83 | ±1.34 | SWA |
| 6. I believe using AI tools for schoolwork is generally ethical. | 4.83 | ±1.40 | SWA |
| 7. Most of my classmates think using AI tools for schoolwork is okay. | 5.17 | ±1.34 | SWA |
| 8. AI tools can help me learn in a way tailored to my needs. | 5.35 | ±1.07 | A |
| 9. AI tools could eventually replace the need for teachers in some subjects. | 5.13 | ±1.14 | SWA |
| 10. I am concerned that AI tools might give me incorrect or biased information. | 5.35 | ±1.40 | A |
| 11. I worry that relying on AI tools might make it harder for me to learn to think critically. | 5.04 | ±1.77 | SWA |
| 12. I think using AI tools for schoolwork could lead to cheating or plagiarism. | 5.39 | ±1.50 | A |

Description: SD (Strongly Disagree): 1.00-1.85; D (Disagree): 1.86-2.71; SWD (Somewhat Disagree): 2.72-3.57; N (Neutral): 3.58-4.43; SWA (Somewhat Agree): 4.44-5.29; A (Agree): 5.30-6.15; SA (Strongly Agree): 6.16-7.00.

Additionally, students express moderate confidence in using AI tools effectively (Mean=4.83, SD=±1.34) and in the ethical nature of their use (Mean=4.83, SD=±1.40). This moderate confidence level aligns with Asirit and Hua’s (2023) findings on varying AI readiness in Philippine higher education. The ethical considerations echo the concerns Kumar (2023) raised regarding academic integrity and transparency in the age of AI.

On the statements concerning peer perception and personalized learning, students somewhat agree that their peers accept AI tool use (Mean=5.17, SD=±1.34) and agree that AI can provide tailored learning experiences (Mean=5.35, SD=±1.07). This latter point supports An et al.’s (2023) findings on the potential of AI for personalized education. Moreover, regarding AI’s potential to replace teachers, students somewhat agree that AI could replace teachers in some subjects (Mean=5.13, SD=±1.14). This perception contrasts with Estrellado and Miranda’s (2023) caution about the need for human oversight in AI implementation in Philippine education.

Lastly, regarding AI concerns and risks, students agree with concerns about incorrect or biased information (Mean=5.35, SD=±1.40) and the potential for cheating (Mean=5.39, SD=±1.50), and somewhat agree with the concern about the impact on critical thinking (Mean=5.04, SD=±1.77). These concerns align with issues raised by Harrer (2023) and Lubowitz (2023) regarding the potential drawbacks of AI in education.

3.4 Institutional Policies and Awareness of AI Use

The succeeding tables (Tables 5 and 6) present data on student respondents’ awareness of institutional policies and their perceptions regarding AI use in education. Table 5 covers students’ perceptions of the institutional policies and awareness of AI use (statements 1, 3, 4, and 5). Table 6 presents the common themes in responses to statement 2, which asked for a brief description of any institutional policies, rules, or guidelines concerning the use of AI in the classroom.

Table 5. Students’ perceptions of the Institutional Policies and Awareness of AI use.

| Statement | Frequency (f) | Percentage (%) |
| --- | --- | --- |
| 1. Are you aware of any rules or guidelines at your school about using AI tools in your classes or assignments? | | |
| Yes | 78 | 17.29 |
| No | 235 | 52.11 |
| I’m not sure | 138 | 30.60 |
| Total | 451 | 100.00 |
| 3. Have your instructors talked about using AI tools in your classes? | | |
| Yes, we’ve had in-depth discussions about it | 0 | 0.00 |
| Yes, it’s been mentioned briefly | 157 | 34.81 |
| No, it’s not been mentioned | 235 | 52.11 |
| I’m not sure | 59 | 13.08 |
| Total | 451 | 100.00 |
| 4. Should your school offer more information or training on using AI tools ethically and responsibly for schoolwork? | | |
| Yes | 333 | 73.84 |
| No | 0 | 0.00 |
| I’m not sure | 118 | 26.16 |
| Total | 451 | 100.00 |
| 5. If you answered Yes to the previous question, what kind of information or training would be most helpful? (Check all that apply) | | |
| Workshops or tutorials on how to use different AI tools | 176 | 20.05 |
| Clear guidelines on what’s considered ethical AI use in schoolwork | 251 | 28.59 |
| Examples of how AI tools can be used in specific classes or assignments | 255 | 29.04 |
| Information on the potential risks and limitations of AI tools | 196 | 22.32 |
| Other | 0 | 0.00 |
| Total responses (multiple) | 878 | 100.00 |

As can be gleaned from Table 5, only 17.29 % of respondents are aware of any rules or guidelines about using AI tools in their classes or assignments. This low awareness aligns with findings from Asirit and Hua (2023), who noted varying levels of AI readiness in Philippine higher education institutions. The majority (52.11 %) reported no awareness, while 30.60 % were unsure, indicating a significant gap in institutional communication about AI policies.

Moreover, more than half of the respondents (52.11 %) reported that their instructors had not mentioned AI tools in classes, 34.81 % said the topic had been mentioned briefly, and none reported in-depth discussions. This absence of in-depth discussion suggests that many educators may not be fully prepared to integrate AI into their teaching, as Estrellado and Miranda (2023) highlighted in their study on AI in the Philippine educational context. Furthermore, a substantial majority (73.84 %) of students believe their school should offer more information or training on using AI tools ethically and responsibly. This high demand for guidance aligns with Villaceran, Rioflorido, and Paguiligan’s (2024) findings, emphasizing the need for comprehensive AI literacy programs in educational settings.

Lastly, among those who want more information or training, the most frequently selected options were examples of AI use in specific classes (29.04 % of the 878 responses) and clear ethical guidelines (28.59 %). This aligns with Kumar’s (2023) emphasis on the importance of ethical considerations in AI use. The interest in information on risks and limitations (22.32 %) and in workshops (20.05 %) further highlights students’ desire for a comprehensive understanding of AI tools.
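
For readers who wish to re-derive the percentages in Table 5, the following minimal sketch recomputes them from the reported frequencies. It assumes each percentage is the frequency divided by the block total (451 respondents for statement 1; 878 multiple responses for statement 5), rounded to two decimals. The function name `percentages` and the shortened option labels are illustrative choices, not part of the study’s instrument.

```python
def percentages(counts: dict[str, int]) -> dict[str, float]:
    """Convert raw frequencies to percentages of the block total."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to summarise")
    return {option: round(100 * f / total, 2) for option, f in counts.items()}


# Frequencies copied from Table 5, statement 1 (n = 451 respondents).
awareness = {"Yes": 78, "No": 235, "I'm not sure": 138}

# Frequencies copied from Table 5, statement 5 (878 multiple responses).
# Option labels are abbreviated here for readability.
training = {
    "Workshops or tutorials": 176,
    "Clear ethical guidelines": 251,
    "Examples in specific classes": 255,
    "Risks and limitations": 196,
    "Other": 0,
}

for option, pct in percentages(awareness).items():
    print(f"{option}: {pct:.2f} %")
for option, pct in percentages(training).items():
    print(f"{option}: {pct:.2f} %")
```

Because statement 5 allowed multiple selections, its percentages are shares of all responses given rather than shares of respondents.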

The thematic analysis of responses to the open-ended question about institutional rules or guidelines on AI use, presented in Table 6, revealed three primary themes: referencing and attribution, ethical use, and uncertainty.

Table 6. Thematic Analysis of Responses to Question 2 - Awareness of AI Usage Rules and Guidelines.

| Themes | Description | Actual Responses |
| --- | --- | --- |
| 1. Referencing and Attribution | Need to cite AI sources or acknowledge AI use. | That we should acknowledge the AI tool if we use it and include it as a reference (Respondent 13) Acknowledge the original author, or don’t copy the whole idea of AI. (Respondent 15) Using AI can be plagiarism if not properly cited. (Respondent 16) |
| 2. Ethical Use | Guidelines on the appropriate use of AI tools. | Using AI tools like ChatGPT is okay, but too much use is not okay. It will help if you use critical thinking. (Respondent 18) It should be wise to think about the negative side of using AI. (Respondent 9) Be appropriate and use AI responsibly. (Respondent 12) |
| 3. Uncertainty | Lack of explicit knowledge about rules. | Balance benefits and risks. (Respondent 17) Some information is not accurate. (Respondent 10) I’m unsure, but we need to be cautious when using AI. (Respondent 19) |

Concerning referencing and attribution, this theme emerged as a significant concern among students who are aware of AI guidelines. The responses highlight a spectrum of comprehension regarding attribution. Respondent 13 demonstrates a detailed awareness of the need for proper citation, while Respondent 15 emphasizes the importance of acknowledging sources. Respondent 16’s comment directly links improper AI use to plagiarism, indicating an understanding of the potential academic integrity issues. This range of responses aligns with Kumar’s (2023) findings on the growing importance of addressing AI-related academic integrity in higher education. However, the fact that only a few respondents mentioned attribution suggests that clear guidelines on AI attribution may not be widespread or well-communicated in rural Philippine higher education institutions.

Moreover, the second theme focused on the ethical implications of AI use in academic settings. Respondent 18’s comment reflects a nuanced understanding that while AI tools are acceptable, they should not replace original thinking. This aligns with Chan and Hu’s (2023) findings on students’ balanced views of AI in education. Respondent 9’s emphasis on wise thinking suggests an awareness of the potential complexities involved in AI use. Similarly, Respondent 12’s call for appropriate and responsible use indicates an understanding of the ethical dimensions of AI in education. These varied responses highlight the need for comprehensive ethical guidelines that address the multi-faceted nature of AI use in academic settings.

Lastly, the responses under the third theme (uncertainty) reveal a lack of clear understanding of AI-related rules. Respondent 17’s comment about balancing benefits and risks suggests an awareness of AI’s potential advantages and drawbacks but uncertainty about navigating them. Respondent 10’s statement about the possible unreliability of AI-generated information underscores the need for critical thinking skills when using these tools. Respondent 19’s explicit uncertainty is particularly telling, highlighting the gap in communication of AI policies. This uncertainty echoes Asirit and Hua’s (2023) findings on varying levels of AI readiness in Philippine higher education institutions.

The diversity of responses within each theme suggests that while some students have a basic grasp of AI-related ethical considerations, there is a lack of consistent, clear guidelines across institutions. This variability in understanding could lead to uneven application of AI tools and potential academic integrity issues. Furthermore, these responses represent only a small fraction of the sample (with the majority providing no response or indicating no awareness of guidelines), emphasizing the significant gap in AI policy implementation and communication in rural Philippine higher education institutions. This aligns with Estrellado and Miranda’s (2023) call for more comprehensive AI education and policy communication in Philippine educational contexts.

3.5 Future Perspectives

Table 7 presents a thematic analysis of the college students’ responses regarding their perspectives on how AI tools will change education in the next 5-10 years. This analysis provides crucial insights into students’ expectations, hopes, and concerns about the future of AI in education, which is essential for understanding the potential impact and challenges of AI integration in rural Philippine higher education settings.

A significant theme that emerged from the responses is the potential of AI to personalize learning experiences. Respondent 3 highlights AI’s capacity to transform education systems, making them more equitable and allowing teachers to focus on social-emotional aspects of learning. This aligns with the findings of An et al. (2023), who noted the potential of AI for creating individualized learning experiences.

Table 7. Thematic Analysis of Responses to Section 5: Future Perspectives.

| Theme | Description | Actual Responses |
| --- | --- | --- |
| 1. Personalized Learning | AI’s potential to tailor education to individual needs. | AI could transform education systems and make them more equitable, freeing up teachers’ time so they could focus on social-emotional learning. (Respondent 3) Artificial intelligence (AI) has the potential to revolutionize higher learning in the next five to ten years in numerous ways. Like Personalized learning: AI can create personalized learning experiences for individual students. Analyzing data on a student’s performance, preferences, and learning style. (Respondent 6) AI tools will help teachers teach better and students learn more by giving personalized help and making lessons more engaging. (Respondent 5) |
| 2. Efficiency and Convenience | AI is making education faster and easier. | To make life easier. (Respondent 7) It can make work faster. (Respondent 8) It will be commonly used, making the student’s life much easier. (Respondent 10) |
| 3. Concerns about Over-reliance | Worries about students becoming too dependent on AI. | It makes students lazy about studying and makes their lives easier. (Respondent 1) Since using AI, school tasks and activities have become more accessible; possibly, this will be why people depend on AI. (Respondent 12) Students will be too lazy to study in a traditional method; instead, they will rely on AI. (Respondent 22) |

Respondent 6 provides a more detailed perspective, mentioning AI’s ability to analyze student performance, preferences, and learning styles to create tailored experiences. This vision of AI-driven personalized learning supports Zekaj’s (2023) assertion that AI tools are becoming valuable educational allies.

Moreover, most respondents emphasized AI’s potential to make education more efficient and convenient. Responses like: “To make life easier” (Respondent 7) and “It can make work faster” (Respondent 8) reflect students’ expectations that AI will streamline educational processes. This theme aligns with the findings of Welding (2023), who reported positive attitudes toward AI-driven educational tools among students. However, this expectation of increased convenience also raises questions about the balance between efficiency and deep learning, a concern echoed in the literature by Lubowitz (2023).

Lastly, a recurring theme in the responses was the concern about potential over-reliance on AI tools. Comments such as “It makes students lazy about studying” (Respondent 1) and “Students will be too lazy to study in a traditional method; instead, they will rely on AI” (Respondent 22) reflect apprehensions about AI potentially diminishing students’ initiative and critical thinking skills. This concern aligns with the findings of Kumar (2023) regarding the potential impact of AI on academic integrity and independent thinking.

3.6 Additional Feedback

Table 8 includes the thematic analysis of the college students’ additional feedback regarding their experiences and thoughts on using AI tools in education. This analysis provides valuable insights into students’ nuanced perspectives on AI integration in their academic lives, highlighting the perceived benefits and concerns associated with these tools.

Table 8. Thematic Analysis of Responses to Additional Feedback.

| Theme | Description | Actual Response |
| --- | --- | --- |
| 1. Usefulness with Caution | Recognizing AI’s benefits while acknowledging its limitations | AI tools in education can make learning fun, personalized, and effective for everyone involved, shaping a brighter future for students and teachers. But use it responsibly. (Respondent 7) It’s okay to use AI tools because they help me get ideas. (Respondent 16) AI is useful for me, but sometimes, relying too much on AI makes me lose my way of thinking about my idea. (Respondent 23) |
| 2. Accuracy Concerns | Worries about the reliability of AI-generated information | Based on my experience, AI will give you the answers, but sometimes the answer is not related to the question. That’s why I do not fully trust the AI. (Respondent 3) Not all AI tools give accurate data, so be vigilant and recheck. (Respondent 10) I can only share that AI tools do not give you the perfect answer. (Respondent 20) |
| 3. Balancing AI Use and Critical Thinking | Emphasizing the need to use AI as a tool without over-reliance | I want to share that it’s okay to use AI, but we should know its limitations because it could affect us students if we use inappropriately. (Respondent 12) Using AI makes our lives easier, especially for students, but sometimes we need to use our brains to answer questions. Don’t depend on AI for a perfect answer. (Respondent 13) |

A consistent theme that emerged from the responses is the recognition of AI’s benefits in education, coupled with an awareness of the need for responsible use. Respondent 7’s comment encapsulates this theme well, highlighting AI’s potential to make learning fun, personalized, and effective while emphasizing the importance of responsible use. This balanced perspective aligns with the findings of Chan and Hu (2023), who noted that students often have nuanced views on the benefits and challenges of AI in education.

Furthermore, several respondents expressed concerns about the reliability and accuracy of AI-generated information. Respondent 3’s comment about not fully trusting AI due to occasionally irrelevant answers highlights a critical awareness of AI’s limitations. This theme resonates with the findings of Kumar (2023) regarding the potential risks of AI in academic settings, particularly regarding information accuracy. The prevalence of this theme suggests that the student respondents are not passive consumers of AI technology but are actively engaging with it critically. This critical approach could be leveraged in developing AI literacy programs, as suggested by Villaceran et al. (2024), to help students better navigate the strengths and limitations of AI tools.

The third theme reflects students’ recognition of the need to balance AI use with their critical thinking skills. Respondent 13’s comment (translated from Cebuano) emphasizes that while AI can make student life easier, it’s crucial to use one’s brain and not depend entirely on AI for answers. This perspective aligns with Lubowitz’s (2023) concerns about potential over-reliance on AI in academic settings. This theme suggests that rural Philippine college students are aware of the potential for AI to supplement rather than replace critical thinking skills. This awareness could be a valuable foundation for developing educational strategies integrating AI tools while fostering independent thinking and problem-solving skills.

4. DISCUSSION

This study aimed to explore rural Philippine college students’ perspectives and experiences with AI tools in education, addressing an important gap in comprehending how these AI technologies are perceived and utilized in non-urban, developing country contexts.

4.1 Demographic profile and AI tool usage

The study’s demographic profile, with a majority of respondents aged 21-22 (78.05 %) and a higher proportion of female students (60.98 %), aligns with typical college student demographics in the Philippines (Villarino et al., 2022a; Villarino et al., 2022b). Future research could explore whether age and gender differences influence AI tool preferences, usage frequencies, or perceived benefits and risks.

The sample’s predominance of technical and vocational programs reflects the educational priorities in rural areas, as Barajas et al. (2024) noted. This context is crucial for interpreting the study’s high AI tool awareness and usage levels. Moreover, the concentration of respondents in technical fields and upper-year levels suggests a sample that may be more technologically inclined and experienced with academic practices. While this may lead to more informed perspectives on AI tools, it also underscores the need for future research to explore AI adoption among a more diverse range of academic disciplines and year levels (Estrellado and Miranda, 2023).

Moreover, 100 percent of respondents reported familiarity with and usage of AI tools for academic purposes, significantly higher than previous findings in Philippine higher education (Asirit & Hua, 2023). This universality of AI tool adoption contradicts assumptions about the digital divide in rural areas and suggests rapid technological integration in these settings. However, it also raises questions about potential sample bias toward more tech-savvy students, a limitation that future research should address, as discussed in section 4.8.

The preference for ChatGPT (78.54 % of respondents) aligns with global trends noted by Limna et al. (2023) but indicates a potential lack of diversity in AI tool exposure. This heavy reliance on a single tool may limit students’ understanding of AI’s broader capabilities and risks in education (Villaceran et al., 2024).

4.2 Perceptions and ethical considerations

Students generally expressed positive attitudes towards AI tools, finding them easy to use (Mean=5.13, SD=±1.58) and helpful in learning (Mean=5.17, SD=±1.53). These findings support Chan and Hu’s (2023) observations on students’ positive perceptions of AI in education. However, the study also revealed significant concerns about AI’s potential for providing incorrect or biased information (Mean=5.35, SD=±1.40) and its impact on critical thinking skills (Mean=5.04, SD=±1.77).

The ethical implications of AI in education emerged as a central theme in this study, echoing broader debates in the field. Students expressed strong concerns about AI tools facilitating cheating and plagiarism (Mean=5.39, SD=±1.50), aligning with Kumar’s (2023) findings on the challenges AI poses to academic integrity.

The ease with which AI can produce essays or solve complex problems raises questions about the authenticity of student work and the fairness of assessments. While this study found high adoption rates of AI even in rural settings, broader issues of educational equity cannot be ignored. Asirit and Hua (2023) highlight how the digital divide in rural Philippines can create unequal opportunities for AI tool use. Harrer (2023) warns that AI systems could reflect, perpetuate, or amplify societal biases if not carefully designed and implemented.

The potential erosion of critical thinking skills due to over-reliance on AI tools emerged as another significant concern, reflecting a broader educational debate about AI’s role in cognitive development (Holmes et al., 2022). While AI offers benefits through personalized learning and immediate feedback (Cai et al., 2022), there are apprehensions about its impact on autonomous problem-solving abilities (Lubowitz, 2023). Although not explicitly measured in our study, the literature suggests growing concerns about data privacy and security in AI-enhanced educational environments (Crompton & Burke, 2023), an area that future research should explore in rural contexts.

Additionally, the “black box” nature of many AI algorithms raises ethical questions about transparency and accountability in educational decision-making (Zhai et al., 2021), particularly relevant in rural areas where technological literacy may be lower. These ethical considerations emphasize the need for comprehensive AI literacy programs and clear institutional guidelines on AI use in academic settings.

4.3 Institutional policies and awareness

A salient finding was the low awareness of institutional policies regarding AI use, with only 17.29 % of respondents aware of any rules or guidelines. This lack of awareness, coupled with high usage rates, highlights a critical policy communication and implementation gap in rural Philippine higher education institutions. It aligns with Estrellado and Miranda’s (2023) call for more comprehensive AI education and policy communication in Philippine educational contexts.

The strong desire for more information and training on ethical AI use (73.84 % of respondents) emphasizes the need for structured AI literacy programs. This finding supports Villaceran et al.’s (2024) emphasis on comprehensive AI education in curriculum development.

4.4 Future perspectives and concerns

The thematic analysis of students’ future perspectives revealed a mixed view of AI’s role in education. Concerns about over-reliance and the potential erosion of traditional study skills balanced optimism about AI’s potential for personalized learning and efficiency. This duality reflects the global discourse on AI in education, as highlighted by Crompton and Burke (2023), but is anchored in a distinct rural Philippine context. The concern about laziness and over-dependence on AI tools may be particularly pronounced in this context, where traditional educational values might be more deeply entrenched.

4.5 Implications for Philippine higher education

The findings of this study have several significant implications for the integration of AI in rural Philippine higher education. Foremost, there is an urgent need for clear, well-communicated institutional policies on AI use in academic settings. These policies would provide a framework for ethical and effective AI utilization, addressing the current gap in student awareness of guidelines.

Alongside policy development, the implementation of comprehensive, culturally sensitive AI literacy programs is essential. These programs should address both the benefits and risks of AI tools in education, equipping students with the knowledge and skills to navigate the evolving technological landscape. Furthermore, the study highlights the importance of diversifying AI tool exposure beyond a single dominant platform. Broadening students’ experiences with various AI tools is crucial to enhancing their understanding of AI capabilities and limitations, fostering a more nuanced perspective on AI’s role in education.

Lastly, the high AI adoption rates observed in this study present a unique opportunity. Educators and institutions can leverage this enthusiasm to enhance educational outcomes while simultaneously addressing concerns related to critical thinking and academic integrity. By thoughtfully integrating AI into the curriculum and teaching practices, rural Philippine higher education institutions can harness the potential of these tools while mitigating potential drawbacks, ultimately preparing students for a future where AI is increasingly prevalent in both academic and professional settings.

4.6 Balancing AI tools’ benefits and risks in rural Philippine higher education context

This study provides insights into the complex dynamics of AI adoption and perception among rural Philippine college students. The high adoption rate challenges assumptions about the digital divide in rural areas, aligning with recent research by Xue et al. (2024) on rapid AI tool adoption among college students globally.

The tension between perceived benefits and potential risks points to the need for balanced and ethically informed practices in integrating AI into educational settings, as emphasized by Kumar (2023). The low awareness of institutional policies (17.29 %) underscores the need for improved policy communication, echoing Estrellado and Miranda’s (2023) call for comprehensive AI education in Philippine contexts.

Students’ dual views of AI’s role in education, recognizing its benefits while expressing concerns about impacts on critical thinking and academic integrity, present both challenges and opportunities for institutions. The strong desire for more information on ethical AI use (73.84 % of respondents) indicates an opportunity for targeted AI literacy programs, as suggested by Villaceran et al. (2024).

4.7 Applicability of results to diverse educational settings

While this study focuses on rural Philippine college students, its findings have potential implications for a broader range of educational contexts. The high adoption rate of AI tools and the concerns raised by students offer insights that may be relevant to various educational settings, both within and beyond the Philippines.

In developing countries, the rapid adoption of AI tools observed in this rural setting challenges assumptions about the digital divide. Similar patterns might be observed in other developing nations, particularly in areas where mobile technology has leapfrogged traditional infrastructure development. Educators and policymakers in countries with comparable socio-economic profiles to the Philippines could benefit from these insights when planning AI integration strategies (Zawacki-Richter et al., 2019). Although this study focused on rural students, the high AI adoption rates and the concerns raised (e.g., impact on critical thinking, potential for cheating) are likely to be relevant in urban settings as well. Urban institutions might face similar challenges in developing ethical guidelines and ensuring responsible AI use (Crompton & Burke, 2023).

In the context of global higher education, the tension between perceived benefits and risks of AI use, as well as the low awareness of institutional policies, are issues that likely transcend geographical boundaries. Higher education institutions worldwide could use these findings to inform their AI policy development and communication strategies (Saadatzi et al., 2022). Given the predominance of technical and vocational programs in our sample, our findings may be particularly relevant to similar institutions globally. The high AI adoption rates in these fields suggest a need for AI literacy programs that are tailored to practical, skill-based educational contexts (Barajas et al., 2024).

The ethical concerns raised by students in this study, such as the potential for AI to provide incorrect information or facilitate cheating, are universal issues in AI ethics. These findings could inform the development of AI ethics curricula across various educational levels and settings (Harrer, 2023). Furthermore, the gap between high AI adoption and low awareness of institutional policies highlighted in this study may be indicative of broader challenges in regulating emerging technologies in educational settings. Policymakers in various contexts could draw on these findings when developing responsive and adaptive regulatory frameworks (Duan et al., 2023).

However, the direct applicability of these results may vary depending on specific cultural, socio-economic, and technological contexts. Factors such as internet connectivity, device availability, cultural attitudes toward technology, and existing educational policies will all influence how AI is adopted and perceived in different settings.

4.8 Limitations and future research

While this study contributes valuable insights, several limitations should be considered when interpreting its findings. The study’s focus on a single rural state college in the Philippines potentially limits its generalizability to other rural areas of the Philippines or diverse cultural settings. Also, the voluntary response sampling method may have led to an overrepresentation of students with strong opinions or interest in AI, introducing a self-selection bias (Etikan et al., 2016). Moreover, the 100 % AI tool usage rate among respondents suggests a potential technology bias, possibly inflating estimates of technology diffusion rates in the broader rural student population (Bethlehem, 2010).

The strong preference for ChatGPT (78.54 %) among respondents limits insights into interactions with diverse AI tools, while the cross-sectional nature of the study may not fully capture the rapidly evolving landscape of AI in education. Lastly, the unique socio-economic and cultural context of rural Philippines may restrict the generalizability of findings to other settings.

To address these limitations, future research can employ the following strategies: (1) implementing stratified random sampling to ensure better representation across levels of technological familiarity and access; (2) conducting multi-site studies across various rural and urban settings in the Philippines and other developing countries to enhance the generalizability of findings; (3) using mixed-mode surveys with both online and offline data collection methods to capture a more diverse range of respondents; (4) incorporating in-depth interviews or focus groups to provide richer qualitative insights into students’ experiences and perceptions; (5) conducting longitudinal studies to observe changes in perceptions and usage patterns over time, given the rapid evolution of AI technologies; and (6) performing non-response bias analysis to understand the characteristics of students less likely to participate in technology-based studies, providing a more comprehensive picture of AI adoption and perception in rural higher education settings.
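
To make the first recommendation concrete, the sketch below shows one possible form of proportionally allocated stratified random sampling. It is a hypothetical illustration, not a procedure used in this study: the roster, the stratum labels (`daily`, `weekly`, `rarely`), the 10 % sampling fraction, and the function name `stratified_sample` are all assumptions.

```python
import random
from collections import defaultdict


def stratified_sample(population, stratum_of, fraction, seed=0):
    """Draw the same fraction at random from every stratum."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for label in sorted(strata):
        members = strata[label]
        # Proportional allocation, with at least one unit per stratum.
        k = min(len(members), max(1, round(fraction * len(members))))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical roster of 300 students tagged by self-reported AI use.
labels = ("daily", "weekly", "rarely")
roster = [(student_id, labels[student_id % 3]) for student_id in range(300)]

picked = stratified_sample(roster, lambda student: student[1], fraction=0.10)
print(len(picked))
```

Sampling within each stratum, rather than relying on volunteers, keeps students with low technological familiarity in the sample in proportion to their share of the roster.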

Furthermore, future research could explore how these findings translate to different geographical, cultural, and institutional contexts. Comparative studies between rural and urban settings, between different countries, or across various types of educational institutions would provide a more comprehensive understanding of AI adoption and perception in global education. Such research would help in developing more universally applicable strategies for integrating AI into education while addressing the ethical and practical concerns raised in this study. These expanded research directions would not only address the limitations of the current study but also contribute to a broader, more nuanced understanding of AI’s role in diverse educational contexts worldwide.

5. CONCLUSION

This study indicates a high rate of adoption and integration of AI tools in academic life among rural college student respondents in Cebu, Philippines, along with a basic understanding of their benefits and risks. As AI continues to reshape the educational landscape, the findings point to the importance of creating clear institutional guidelines on the use of AI, developing AI literacy programs, and revisiting the assumption about the digital divide in rural higher education institutions. The findings also have policy implications for curriculum development and for the ethics of integrating AI into higher education, and they highlight the need for educational strategies that harness the benefits of AI while actively cultivating students’ critical thinking skills and academic integrity.

ETHICAL CONSIDERATIONS

The study protocol (i.e., research methodology) adhered to the 2022 National Ethical Guidelines for Health and Health-Related Research (Philippine Council for Health Research and Development 2017), ensuring the protection of participants’ rights, privacy, and confidentiality. Informed consent was obtained from all respondents through their signatures on the online Informed Consent Form (ICF).

AVAILABILITY OF DATA AND MATERIALS

The data analyzed during the present study, which support its findings, are available from the author [RTV] upon request.

ACKNOWLEDGMENT

The author would like to thank the student respondents for participating in this study and for the valuable time and effort they spent completing the questionnaire.

REFERENCES

American Psychological Association. (2020). Publication manual of the American Psychological Association (7th ed.). https://doi.org/10.1037/0000165-000

American Psychological Association. (2023, June). How to use ChatGPT as a learning tool. APA Monitor. https://www.apa.org/monitor/2023/06/chatgpt-learning-tool

, , , , , , & (2023). Modeling English teachers’ behavioral intention to use artificial intelligence in middle schools. Education and Information Technologies, 28(5), 5187–5208. https://doi.org/10.1007/s10639-022-11286-z

, & (2023). Converging perspectives: Assessing AI readiness and utilization in Philippine higher education. Philippine Journal of Science and Research in Technology, 2(3), 152-165. https://doi.org/10.58429/pgjsrt.v2n3a152

, & (2023). Women’s knowledge of sexually transmitted diseases in Telafer City, Iraq. Nurse Media Journal of Nursing, 13(1), 12–21. https://doi.org/10.14710/nmjn.v13i1.48924

, , , , , , & (2024). Exploratory data analysis of artificial intelligence integration in Philippine engineering programs offered by state universities and colleges: A preliminary assessment. In IEEE Systems and Information Engineering Design Symposium (SIEDS) (pp. 1-6). IEEE. https://doi.org/10.1109/sieds61124.2024.10534634

(2010). Selection bias in web surveys. International Statistical Review, 78(2), 161-188. https://doi.org/10.1111/j.1751-5823.2010.00112.x

, , , , , & (2023). ChatGPT in surgical practice—A new kid on the block. Indian Journal of Surgery, 85, 1346–1349. https://doi.org/10.1007/s12262-023-03727-x

(2023). ChatGPT and the future of medical writing. Radiology, 307(2), Article e223312. https://doi.org/10.1148/radiol.223312

, , , , , , , , , , & (2023). The mediating effect of AI trust on AI self-efficacy and attitude toward AI of college students. International Journal of Management, 2(1), 2286. https://doi.org/10.54536/ijm.v2i1.2286

, , , & (2022). Artificial intelligence education for K-12 in the AI era: A systematic review and future directions. Frontiers in Psychology, 13, Article 818805. https://doi.org/10.3389/fpsyg.2022.818805

(2023). Towards Intelligent-TPACK: An empirical study on teachers’ professional knowledge to ethically integrate artificial intelligence (AI)-based tools into education. Computers in Human Behavior, 138, Article 107468. https://doi.org/10.1016/j.chb.2022.107468

, & (2023). The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and Millennial generation teachers? Smart Learning Environments, 10, Article 60. https://doi.org/10.1186/s40561-023-00269-3

, & (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(1), Article 43. https://doi.org/10.1186/s41239-023-00411-8

, & (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20(1), Article 22. https://doi.org/10.1186/s41239-023-00392-8

, , , , , , , & (2020). Artificial intelligence in radiology: Does it impact medical students preference for radiology as their future career? BJR|Open, 2(1), Article 20200037. https://doi.org/10.1259/bjro.20200037

, , & (2023). The impact of AI-driven personalization on learners’ performance. International Journal of Computer Sciences and Engineering, 11, 15–22. https://doi.org/10.26438/ijcse/v11i8.1522

, , & (2023). Artificial intelligence for decision making in the era of Big Data – evolution, challenges and research agenda. International Journal of Information Management, 48, 63-71. https://doi.org/10.1016/j.ijinfomgt.2019.01.021

, & (2017). An applied guide to research designs: Quantitative, qualitative, and mixed methods. Sage Publications. https://doi.org/10.4135/9781071802779

, & (2023). Artificial intelligence in the Philippine educational context: Circumspection and future inquiries. International Journal of Scientific and Research Publications, 13(5), 704-715. https://doi.org/10.29322/ijsrp.13.05.2023.p13704

, , & (2016). Comparison of convenience sampling and purposive sampling. American Journal of Theoretical and Applied Statistics, 5(1), 1-4. https://doi.org/10.11648/j.ajtas.20160501.11

, , , & (2023, June 14). Navigating the use of ChatGPT in education and research: Impacts and guidelines. OR/MS Tomorrow. https://www.informs.org/Publications/OR-MS-Tomorrow/Navigating-the-Use-of-ChatGPT-in-Education-and-Research-Impacts-and-Guidelines

(2008). Sampling methods for web and email surveys. In N. Fielding, R. M. Lee, & G. Blank (Eds.), The SAGE handbook of online research methods (pp. 195–216). SAGE Publications. https://doi.org/10.4135/9780857020055.n11

, , & (2022). Artificial intelligence in STEM education: A systematic review. Education and Information Technologies, 27(6), 8039-8074. https://doi.org/10.1007/s10639-022-11001-y

, , , & (2022). Exploring an AI-based writing assistant’s impact on English language learners. Computers and Education: Artificial Intelligence, 3, Article 100055. https://doi.org/10.1016/j.caeai.2022.100055

(2016). The importance of selection bias in internet surveys. Open Journal of Statistics, 6(3), 397-404. https://doi.org/10.4236/ojs.2016.63035

(2023). Attention is not all you need: The complicated case of ethically using large language models in healthcare and medicine. eBioMedicine, 90, Article 104512. https://doi.org/10.1016/j.ebiom.2023.104512

, , & (2022). Artificial intelligence in education: Promises and implications for teaching and learning. Center for Curriculum Redesign. https://doi.org/10.58863/20.500.12424/4276068

IBM Corp. (2019). IBM SPSS Statistics for Windows (Version 26.0) [Computer software]. IBM Corp.

(2023). Students’ cognition of artificial intelligence in education. Applied and Computational Engineering, 22(12), 1818. https://doi.org/10.54254/2755-2721/22/20231218

, & (2023). Revolutionizing learning: An exploratory study on the impact of technology-enhanced learning using digital learning platforms and AI tools on the study habits of university students through focus group discussions. International Journal of Research Publication and Reviews, 4(6), 663–672. https://doi.org/10.55248/gengpi.4.623.44407

(2023). ChatGPT is shaping the future of medical writing but still requires human judgment. Radiology, 307(2), Article e230171. https://doi.org/10.1148/radiol.230171

(2023). Analysis of ChatGPT tool to assess the potential of its utility for academic writing in biomedical domain. Biology, Engineering, Medicine and Science Reports, 9(1), 24-30. https://doi.org/10.5530/bems.9.1.5

, , & (2022). Impacts of an AI-based chatbot on college students’ after-class review, academic performance, self-efficacy, learning attitude, and motivation. Educational Technology Research and Development, 70(5), 1843–1865. https://doi.org/10.1007/s11423-022-10142-8

, , , , & (2023). The use of ChatGPT in the digital era: Perspectives on chatbot implementation. Journal of Applied Learning & Teaching, 6(1). https://doi.org/10.37074/jalt.2023.6.1.32

(2023). ChatGPT, an artificial intelligence chatbot, is impacting medical literature. Arthroscopy: The Journal of Arthroscopic & Related Surgery, 39(5), 1121–1122. https://doi.org/10.1016/j.arthro.2023.01.015

, & (2023). From paintbrush to pixel: A review of deep neural networks in AI-generated art. arXiv. https://doi.org/10.48550/ARXIV.2302.10913

(2024). Perceptions of higher education faculty regarding the use of Chat Generative Pre-Trained Transformer (ChatGPT) in education. International Journal on Open and Distance e-Learning, 9(2), 249-265. https://doi.org/10.58887/ijodel.v9i2.249

(2023, April 16). Survey: 32.4 % of college students in Japan say they use ChatGPT. The Asahi Shimbun. https://www.asahi.com/ajw/articles/14927968

(2022, December 7). How ChatGPT could transform higher education. Social Science Space. https://www.socialsciencespace.com/2022/12/how-chatgpt-could-transform-higher-education/

, & (2023). Exploring the potential of using an AI language model for automated essay scoring. SSRN. https://doi.org/10.2139/ssrn.4373111

, , , & (2023). Impact of the implementation of ChatGPT in education: A systematic review. Computers, 12(8), Article 153. https://doi.org/10.3390/computers12080153

(2023, August 24). Can ChatGPT rival university students in academic performance? Neuroscience News. https://neurosciencenews.com/chatgt-student-writing-23821/

, , , & (2020). Student perceptions of the effectiveness of adaptive courseware for learning. Current Issues in Emerging eLearning, 7(1), Article 5. https://scholarworks.umb.edu/ciee/vol7/iss1/5

, , , & (2021). The evolution of AI-driven educational systems during the COVID-19 pandemic. Sustainability, 13(23), Article 13501. https://doi.org/10.3390/su132313501

Philippine Council for Health Research and Development. (2017). Ethical guidelines for health research in the Philippines. Philippine Council for Health Research and Development.

(2023, January 24). Is ChatGPT a threat to education? UC Riverside News. https://news.ucr.edu/articles/2023/01/24/chatgpt-threat-education

, & (2021). Nursing research: Generating and assessing evidence for nursing practice (11th ed.). Wolters Kluwer.

(2023). ChatGPT in scientific and academic research: Future fears and reassurances. Library Hi Tech News, 40(3), 30–32. https://doi.org/10.1108/LHTN-03-2023-0043

, , , , , & (2023). AI perspectives in education: A BERT-based exploration of informatics students’ attitudes to ChatGPT. In International Conference on Electrical Technology (ICET) (pp. 1–6). IEEE. https://doi.org/10.1109/icet59790.2023.10435040

, , & (2022). Impacts of generative AI on higher education: A comprehensive review. Education and Information Technologies, 27(6), 8303-8332. https://doi.org/10.1007/s10639-022-11139-9

, & (2008). Self-selected sample. In P. J. Lavrakas (Ed.), Encyclopedia of survey research methods (pp. 806-808). Sage Publications.

(2018). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Research in Science Education, 48(6), 1273–1296. https://doi.org/10.1007/s11165-016-9602-2

, , , , & (2023). Impact of ChatGPT on academic performance among Bangladeshi undergraduate students. International Journal of Research In Science & Engineering, 35, 18–28. https://doi.org/10.55529/ijrise.35.18.28

VERBI Software. (2019). MAXQDA 2020 [Computer software]. https://www.verbi.com/maxqda-software/