La evaluación del aprendizaje por competencias en el Grado de Medicina: la propuesta de un nuevo modelo para la UNAN- Managua

Evaluation of competence-based learning in degrees in Medicine: a new UNAN-Managua model proposal

Karla E. Molina-Saavedra

Universidad Nacional Autónoma de Nicaragua

Esther Prieto-Jiménez

Universidad Pablo de Olavide

Guillermo Domínguez-Fernández

Universidad Pablo de Olavide


ABSTRACT

The present paper summarises the main results of the first author’s doctoral thesis, “Modelo de evaluación del aprendizaje por competencias: Área clínica del grado de Medicina, UNAN-Managua” [Evaluation model of competence-based learning: Clinical area of the Degree in Medicine, UNAN-Managua], whose purpose was to design an evaluation model of competence-based learning for the Degree in Medicine. To achieve this objective, a mixed-methods study was conducted, using multiple sequential inter-method triangulation (qual-quant-qual) and intra-method qualitative triangulation of the information collected. The main conclusions were threefold. First, current teaching evaluation practices were essentially behaviourist. Second, there was evidence that the competences evaluated varied at the discretion of the teachers in the different training support units. Third, the range of evaluation techniques and instruments was limited. The research process led to a final evaluation proposal based on the CEFIMM model (Context, Evaluator/Evaluated, Purpose, Moments, Methodology), in which evaluation is approached holistically and includes the assessment of the deferred phase.

KEYWORDS

University innovation; evaluation model; competence-based evaluation; degree in Medicine.

1. Introduction.

Contemporary society demands that universities educate and train well-rounded individuals who can solve problems in their different areas of work and demonstrate social responsibility (Crespí & García-Ramos, 2021). This has led higher education institutions to modify their teaching and evaluation methods in order to encourage students to apply and integrate knowledge when solving problems in context; that is, to develop competences.

Martínez and Echeverría (2009) consider that competence-based training requires teachers to focus on “learning to learn” (p. 133). This, however, implies changes to the curriculum, to teaching and evaluation strategies, and to the teacher’s role in promoting self-learning and students’ ability to transfer knowledge to real contexts. Students will thus be better able to face the problems of their professional domains. Indeed, the development of competences requires teaching methods that allow formative assessment, i.e., assessment that fosters students’ individual and collective progress.

Competence-based assessment offers numerous benefits in university education, as it covers a range of interrelated aspects that help to train future professionals (Hernández-Marín, 2018). Casanova et al. (2018) reinforce this idea, specifying that “it is not enough to know that or to know how, it is also necessary to integrate this knowledge with positive attitudes, understood as an individual’s potential capacity to efficiently execute a series of similar actions” (p. 116).

Although evaluation is essential in the teaching-learning process, it is often poorly accepted by those involved and wrongly applied by teachers. Evaluation is commonly associated with detecting errors or applying penalties or corrective measures, which are not always welcomed by those evaluated (Zúñiga et al., 2014). In these cases, students do not embrace evaluation because they rarely receive adequate feedback on their weaknesses. Evaluation is thereby reduced to mere measurement, a feature of summative evaluation rather than of formative assessment.

It is therefore important to use an evaluation model that is valid, reliable and feasible; facilitates students’ training and learning; is considered fair by those involved; and is based on evaluation techniques and instruments with explicit assessment indicators, so as to avoid inter-observer variability (Cañedo, 2008; Carreras et al., 2009; Cabero-Almenara et al., 2021).

In 2012, the National Autonomous University of Nicaragua, Managua (UNAN-Managua) carried out a curricular transformation that involved all faculties, including Medical Sciences. According to the Curriculum approved on 6 December 2012, the university proposed a shift from an essentially behaviourist paradigm of goal-based learning to a constructivist approach. In 2017, the university authorities decided to launch a process of curricular innovation towards competence-based education. The process began in three degrees, in the faculties of Engineering, Economic Sciences and Medical Sciences. It was not until 2019, however, that all 76 UNAN-Managua degrees began their competence-based curricular transformation. This created the need to define a learning evaluation model for the Degree in Medicine that addressed the competences described in the new curriculum.

An evaluation model designed by consensus to meet the needs and characteristics of the context would make it possible to evaluate students’ different areas of knowledge adequately. Such a model should allow self-evaluation and co-evaluation and facilitate timely student feedback and the identification of learning gaps. Learning had to be meaningful so that students could integrate knowledge and experiences that would help them to solve problems in their professional life as successfully as possible (Hincapié & Clemenza, 2022). For this reason, the objective of this study was to design a model for evaluating competence-based learning in the Degree in Medicine at UNAN-Managua.

2. Material and methods.

A mixed methodology was used in the study, and multiple triangulation was performed. Data triangulation of the person subtype was carried out through interactive analysis, interviewing teachers and students of the fourth, fifth and sixth years of the Degree in Medicine at the Faculty of Medical Sciences of UNAN-Managua in 2018. Sequential qual-quant-qual inter-method triangulation and intra-method qualitative triangulation were also performed, using semi-structured interviews, discussion groups and the Delphi method (Sánchez-Taraza & Ferrández-Berrueco, 2022; Varela & García, 2012).

The fieldwork unfolded over three research phases (Deslauriers, 2004). The first and third phases followed a qualitative process, which determined, respectively, the current assessment practices and the essential dimensions of competence-based assessment in the Degree in Medicine. The second phase followed a quantitative process, which determined the improvements needed in teachers’ evaluation practices.

A sample of experts was used in the first phase. Sampling was non-probabilistic, and the number of participants was determined by saturation of categories (Hernández et al., 2010). The inclusion criteria were as follows: active teachers and students of the fourth, fifth and sixth years of the degree, with teachers having taught during the last two academic semesters. These academic years were selected because they correspond to the professional practice areas of the degree, in which students are exposed to different scenarios to develop their medical skills.
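The article states that the number of participants was fixed by saturation of categories but does not describe the stopping rule. A minimal sketch of one common way to operationalise it, assuming each interview has already been coded into a set of categories and that sampling stops once a fixed number of consecutive interviews add no new category. The `window` value, the function name and the example categories are hypothetical and are not taken from the study.

```python
def interviews_until_saturation(coded_interviews, window=3):
    """Return the number of interviews conducted when saturation is reached.

    coded_interviews: sequence of sets, one per interview, holding the
    categories coded in that interview (hypothetical data structure).
    window: how many consecutive interviews must add no new category before
    sampling stops (an assumed stopping rule, not the study's).
    Returns None if the available interviews never reach saturation.
    """
    seen = set()
    without_new = 0
    for count, categories in enumerate(coded_interviews, start=1):
        new = set(categories) - seen
        seen |= new
        # Reset the streak whenever an interview contributes a new category
        without_new = 0 if new else without_new + 1
        if without_new >= window:
            return count
    return None


# Hypothetical coded interviews
interviews = [
    {"grading_criteria", "feedback"},
    {"feedback", "oral_exam"},
    {"case_discussion"},
    {"feedback"},
    {"oral_exam", "case_discussion"},
    {"grading_criteria"},
]
print(interviews_until_saturation(interviews))  # 6
```

A larger `window` makes the rule more conservative, at the cost of more interviews.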

Semi-structured interviews were conducted with 33 teachers and 96 students of the fourth, fifth and sixth years of the Degree in Medicine in 2018. Once the teachers’ evaluation practices had been identified, the elements for improving those practices were determined, and a content analysis of 129 data collection guides was conducted.

The interview script was validated by eight experts (three focusing on the methodological perspective and five on the subject under investigation). It was also validated with the target group by applying the instrument to 16 teachers and 50 students, representing 10 % of the populations under study. Once the information had been processed, it was returned to the interviewees so that they could validate the data. After the semi-structured interviews, two discussion groups were held, one with teachers and one with students of the fourth, fifth and sixth years, each with six participants (two from each year).

In the second phase, the components of the essential evaluation dimensions were assessed using a quantitative methodology and the content analysis technique. The sample consisted of 129 forms; in this phase the universe coincided with the sample, and sampling was non-probabilistic. The data collection guide used in this phase was validated by eight experts, three focusing on methodological aspects and five on the subject under study, who assessed the clarity, comprehensibility, coherence and relevance of the questions; this led to adjustments. A statistical reliability analysis yielded a Cronbach’s alpha coefficient of 0.72, considered average–acceptable (Aybar et al., 2018).
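For reference, Cronbach’s alpha for an instrument with k items is alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores). The sketch below computes it from a respondents × items score matrix using only the Python standard library. The scores are invented for illustration; they are not the study’s data, and this is not the procedure the authors ran.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances (n - 1 denominator) are used throughout; the ratio is
    unchanged if population variances are used consistently instead.
    """
    k = len(responses[0])  # number of items in the instrument
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Variance of each item (column) across respondents
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Variance of each respondent's total score
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical scores: 4 respondents answering 3 Likert-type items
scores = [
    [1, 1, 1],
    [2, 2, 1],
    [3, 3, 2],
    [4, 4, 4],
]
print(round(cronbach_alpha(scores), 3))  # 0.978
```

Identical items give alpha = 1, and alpha falls as items share less variance with the total score.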

In the last, qualitative phase, the Delphi method was applied (García & Suárez, 2013): a group of academics (six medical specialists from the clinical area, six from the basic care area and five from public health) was asked to take part in an expert panel (Yañez & Cuadra, 2008). The inclusion criteria were being a higher education teacher with at least five years of experience whose activity was linked to the training of health professionals. The exclusion criterion was not having taught medical students during the last five years. The purpose was to validate the research process and the proposed evaluation model by asking the experts for their opinion on the study design and on the coherence between the theoretical framework, the objectives and hypotheses raised, and the position adopted.
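Delphi panels are often summarised item by item with the median and interquartile range (IQR) of the experts’ ratings. The article does not state its rating scale or consensus criterion, so the sketch below assumes a 1–5 scale and treats IQR ≤ 1 as consensus, a convention used in some Delphi studies. The ratings are hypothetical and only the panel size (6 + 6 + 5 = 17 experts) comes from the text.

```python
from statistics import median, quantiles


def delphi_item_summary(ratings, max_iqr=1.0):
    """Median, IQR and consensus flag for one Delphi item.

    ratings: the panel's scores for the item (assumed 1-5 scale).
    max_iqr: consensus threshold; IQR <= 1 is an assumed convention,
    not the criterion used in the study.
    """
    # quantiles() sorts the data itself; default method is 'exclusive'
    q1, _, q3 = quantiles(ratings, n=4)
    iqr = q3 - q1
    return {"median": median(ratings), "iqr": iqr, "consensus": iqr <= max_iqr}


# Hypothetical ratings from a 17-member panel
item_a = [3] + [4] * 6 + [5] * 10
item_b = [1, 1, 2, 2, 2, 3, 3, 3, 3, 4, 4, 4, 5, 5, 5, 5, 5]
print(delphi_item_summary(item_a))  # median 5, IQR 1.0 -> consensus
print(delphi_item_summary(item_b))  # median 3, IQR 3.0 -> no consensus
```

Items without consensus would typically be returned to the panel, with the group summary, in a further round.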

In the qualitative results, teachers’ statements are identified with “D” (from the Spanish docente) and the participant number, and students’ statements with “E” (estudiante) and the participant number. Qualitative data were analysed by coding text in ATLAS.ti 7, and quantitative data with SPSS version 18, using measures of central tendency.
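The D/E labelling convention lends itself to simple tallies of how often each code appears among teachers and among students. The sketch below is hypothetical: the code names loosely paraphrase statements quoted in the Results, and it is plain Python, not an ATLAS.ti export or feature.

```python
from collections import Counter

# Hypothetical coded segments: (participant label, code). Labels follow the
# study's convention: "D" + number for teachers, "E" + number for students.
SEGMENTS = [
    ("D3", "unclear_evaluation_methodology"),
    ("E1", "unclear_evaluation_methodology"),
    ("D4", "teacher_defines_criteria"),
    ("E4", "criteria_vary_by_teacher"),
    ("E4", "uneven_graduate_profile"),
    ("D5", "uneven_graduate_profile"),
]

GROUPS = {"D": "teachers", "E": "students"}


def code_counts_by_group(segments):
    """Count how often each code occurs among teachers and among students."""
    counts = {group: Counter() for group in GROUPS.values()}
    for label, code in segments:
        group = GROUPS.get(label[:1])  # first character identifies the group
        if group is None:
            raise ValueError(f"unexpected participant label: {label!r}")
        counts[group][code] += 1
    return counts


for group, counter in code_counts_by_group(SEGMENTS).items():
    print(group, dict(counter))
```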

3. Results.

The different data collection techniques and instruments showed that the most widely used evaluation methodology was mixed (80.6 %), followed by quantitative (15.5 %) and qualitative (3.9 %) methodologies. When teachers were asked to briefly describe the evaluation methodology they used, they described it as mixed, but in terms of the purposes of evaluation: “The evaluation methodology used in the practical learning activities was based on 3 important aspects: diagnostic evaluation, at the beginning of the year, to evaluate the students’ knowledge, skills and activities; formative assessment at the different stages; and summative evaluation, based on an instrument designed according to three evaluation modes (theoretical, oral and report)” (D 109).

Other teachers described the methodology by listing evaluation techniques or instruments: “Peer evaluation, questionnaires with open-ended questions, questions and answers” (D 102). Some gave teaching strategies instead, such as “Brainstorming, 5 essential questions” (D 98), or evaluation objects: “Prior assessment of knowledge and skills guided by practical activity guides” (D 101). On the consequences of teachers being unclear about their evaluation methodology, participants said: “I believe that being unclear about evaluation has an impact on students’ training, because if we are not explicit about how we are going to evaluate, the competence will not be developed” (D3); and, “that the mark will not be objective, because they are evaluating me using a system that they do not even know themselves” (E1).

Regarding the purpose of evaluation, 100 % considered that it was conducted for diagnostic purposes, 99 % for formative purposes and 98 % for summative purposes. Regarding the moment of evaluation, continuous and final evaluation were commonly conducted (99 % and 90 %, respectively), whereas initial assessment was conducted less often (84 %).
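The Results report only percentages for the mixed, quantitative and qualitative methodologies (80.6 %, 15.5 % and 3.9 %). Assuming these are shares of all 129 participants (33 teachers and 96 students), the check below shows that 104, 20 and 5 are the only whole-number counts that round to those figures, and that they sum to 129. The counts are an inference from the percentages, not published data.

```python
TOTAL = 129  # 33 teachers + 96 students (assumed denominator)


def percentage(n: int, total: int = TOTAL) -> float:
    """Share of `total` represented by `n`, in percent, to one decimal place."""
    return round(100 * n / total, 1)


reported = {"mixed": 80.6, "quantitative": 15.5, "qualitative": 3.9}

# For each reported percentage, list every count out of 129 that rounds to it
inferred = {}
for method, pct in reported.items():
    candidates = [n for n in range(TOTAL + 1) if percentage(n) == pct]
    inferred[method] = candidates
    print(method, candidates)

# Each percentage admits exactly one count, and the counts cover all forms
assert sum(c[0] for c in inferred.values()) == TOTAL
```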

Students generally did not participate in defining the evaluation criteria and elements, a task carried out essentially by the authorities and the teacher. Commenting on the impact of students’ non-participation in defining the evaluation criteria, participants stated: “The teacher is supposed to be the expert, the one who should know what level the student should reach, the student does not have that broad vision of what they should study...” (D4); “It’s no problem for me that teachers establish the criteria. What I do feel is a problem is that all teachers, despite being part of the same university, of the same faculty, have different criteria...” (E4).

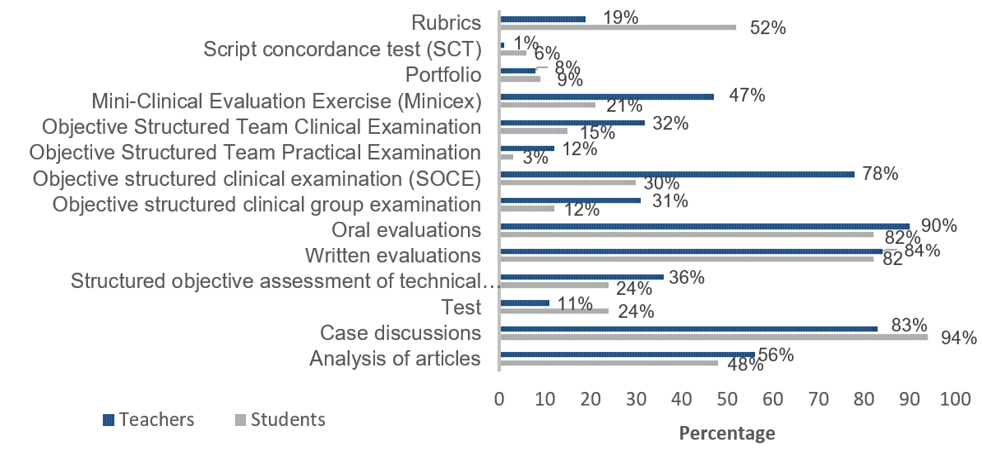

In relation to the object of evaluation and the techniques and instruments used, the most evaluated subjects for “knowing that” were the Clinical Sciences, followed by Critical Thinking, and the least evaluated were the Basic and Humanistic Sciences. They were evaluated using the techniques and instruments shown in Figure 1.

Figure 1. Techniques and instruments for the evaluation of “knowing that”

Regarding the impact on learning, the students interviewed, who wanted evaluations to be dynamic, participatory, objective, systematic and integrative of knowledge, said: “The main repercussion or impact is that we are creating doctors, bad general practitioners, bad future specialists, bad future residents...” (E6).

Regarding the “knowing how”, the evaluated aspects were, in descending order:

1. Establishes the diagnostic foundations of prevalent pathologies.

2. Identifies and respects cultural and personal factors that affect communication and medical management.

3. Performs the anamnesis, describing all the relevant information.

4. Examines the subject’s physical and mental state, using basic exploration techniques.

5. Identifies and interprets the most common complementary tests of prevalent pathologies.

6. Establishes the foundations of a good doctor-patient relationship by listening and providing clear explanations to the patient and/or family members.

7. Clearly records medical information using appropriate technical language.

8. Applies and respects institutional regulations and biosafety standards.

9. Communicates with other members of the health team in a respectful and assertive manner.

10. Critically reads clinical studies.

11. Proposes appropriate preventive and rehabilitation measures in each clinical situation.

12. Establishes the bases of therapeutic management (pharmacological and non-pharmacological) and prognosis of prevalent pathologies based on the best possible information.

13. Executes the diagnostic procedures of the prevalent pathologies under supervision.

14. Uses information and communication technologies (ICT).

15. Recognises the severity criteria and establishes the therapeutic fundamentals of the situations that put the patient’s life at risk.

16. Recognises the cases that need to be transferred.

17. Fills out written informed consent under supervision, giving a clear and non-technical explanation to the patient together with possible complications.

18. Designs and executes an elementary scientific project that affects the health of the community.

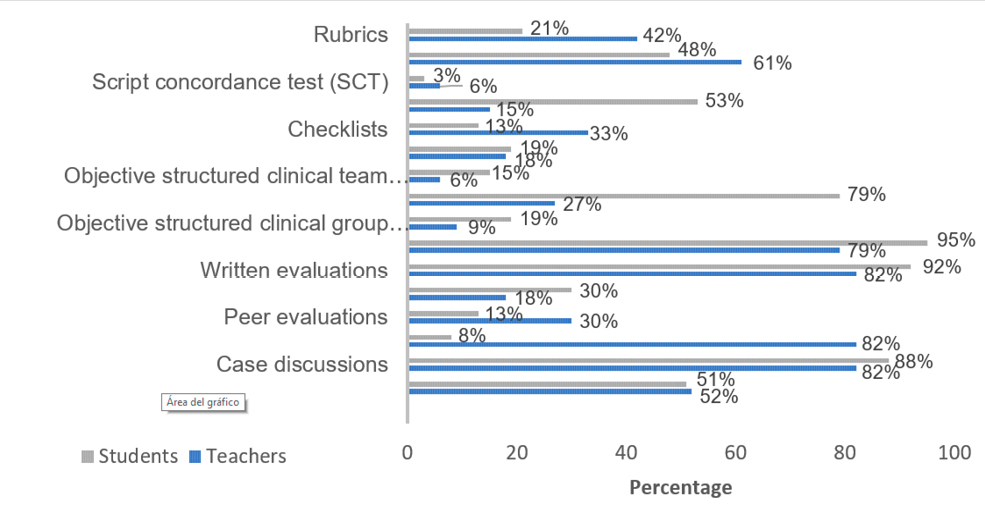

These aspects were evaluated using the techniques and instruments shown in Figure 2.

Figure 2. Techniques and instruments for evaluating the “knowing how”

Regarding “knowing to be”, most interviewees agreed that the following aspects were evaluated:

1. Demonstrates professional values of responsibility, empathy, honesty, integrity, punctuality.

2. Respects the patient, the other members of the health team, and the community.

3. Demonstrates teamwork skills.

4. Demonstrates a commitment to learning.

5. Acts ethically, respecting the legal norms of the profession.

6. Promotes health and disease prevention in patients.

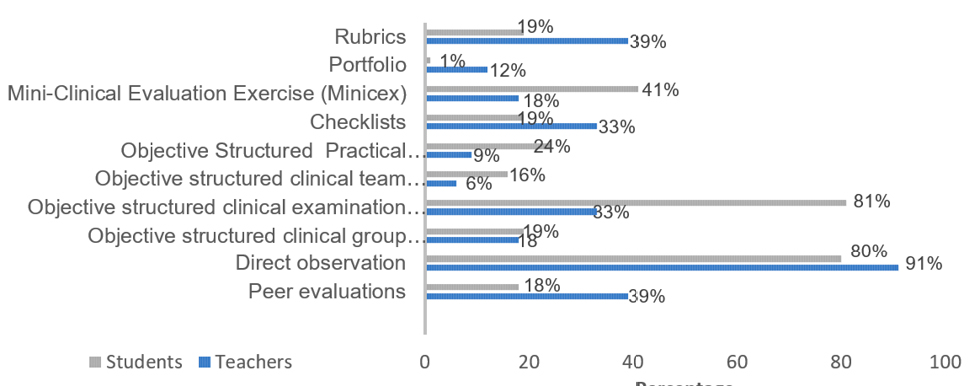

These aspects were evaluated using the techniques and instruments shown in Figure 3.

Figure 3. Techniques and instruments for the evaluation of “knowing to be”

When asked how they would like to be evaluated, students gave answers such as: “With the examples they give us, not many doctors have a good attitude in the professional field” (E 25).

In relation to “knowing how to transfer”, the interviewees stated that this knowledge was evaluated based on the following aspects (in descending order):

1. Promotes health and disease prevention in patients.

2. Recognises factors that influence health (lifestyles, environmental, cultural, etc.).

3. Uses epidemiological data that affect the patient’s health.

4. Uses resources for the greatest benefit of patients.

5. Employs health management principles.

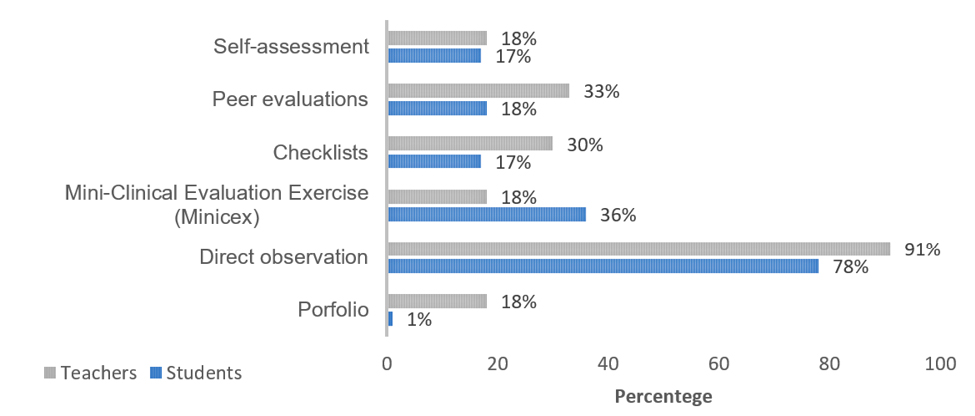

These aspects were evaluated using the techniques and instruments shown in Figure 4.

Figure 4. Techniques and instruments of evaluation of “knowing how to transfer”

When asked about the consequences of some areas of knowledge not being evaluated during students’ training, participants stated: “... different graduates are trained” (D5); “... we complete the degree with weaknesses in certain subjects, that is, the students are not all at a uniform level when they’ve finished their studies...” (E4).

Regarding the impact on student evaluation of teachers not knowing the existing evaluation techniques and instruments best suited to the knowledge being assessed, participants said: “If teachers are not clear about how to evaluate the competences, then the impact will be on the quality of the resources we are forming...” (D3); “The evaluation is not objective, it will never be objective because there isn’t even any criteria” (E1).

4. Discussion.

The conception of evaluation was analysed in the light of several coexisting trends and methods: the behaviourist, rational-scientific perspective; the humanistic and cognitivist approach; and the socio-political and critical viewpoint (Domínguez, 2000). These trends define the object of evaluation, the curriculum (Linderan & Lipsett, 2016) and the method through which evaluation evidence is sought.

Regarding current evaluation practices, participants stated that the most widely used methodology was mixed, combining quantitative and qualitative methods (87.9 % of teachers and 78.1 % of students). However, when asked to describe it, they mixed concepts belonging to different dimensions of evaluation, defining aspects such as purpose, techniques, instruments, evaluation objects or teaching strategies instead of the methodology used. This creates confusion when the methodology is applied in practice, which can become a source of conflict and discontent between students and teachers (Guzmán, 2006).

Most teachers and students in the discussion groups highlighted this finding, considering that ignorance of these aspects had negative consequences for training, such as subjective and erroneous evaluations.
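The group figures in the Discussion (87.9 % of 33 teachers and 78.1 % of 96 students) are consistent with the overall 80.6 % in the Results. Back-calculating, 29 of 33 teachers and 75 of 96 students are the only whole numbers that reproduce them; these counts are an inference, not published data. The sketch shows that the overall figure is a size-weighted pooled rate, not the simple mean of the two group percentages.

```python
def percentage(n: int, total: int) -> float:
    """Share of `total` represented by `n`, in percent, to one decimal place."""
    return round(100 * n / total, 1)


# Inferred counts (not published) that reproduce the reported percentages
teachers_mixed, teachers_total = 29, 33
students_mixed, students_total = 75, 96

print(percentage(teachers_mixed, teachers_total))  # 87.9
print(percentage(students_mixed, students_total))  # 78.1

# Pooling the raw counts weights each group by its size...
pooled = percentage(teachers_mixed + students_mixed, teachers_total + students_total)
print(pooled)  # 80.6
# ...whereas averaging the two percentages ignores the group sizes
print(round((87.9 + 78.1) / 2, 1))  # 83.0
```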

In relation to the purpose, most participants pointed out the three purposes of the evaluation: diagnostic, formative and summative. However, when asked about the moment of the evaluations, there was evidence that the initial diagnostic evaluation was not conducted regularly (88 %).

As for the evaluation elements and criteria, students generally did not take part in defining them; this was done essentially by the authorities and teachers, and some teachers stressed that student participation was unnecessary: “The teacher is supposed to be the expert, the one who should know what level the student should reach, the student does not have that broad vision of what they should study...” (D4). More strikingly, the students not only did not participate in defining the criteria but also indicated that the learning evaluation criteria varied across the different training support units. This implies that, even if the authorities defined the evaluation criteria, as teachers stated, the criteria were either not uniform or changed at each teacher’s discretion, with the risk of inter-observer variation or a lack of objectivity in the evaluations. Moreover, the evaluation currently applied corresponds to a model of verification of achievements, that is, the Tyler model (Asensio, 2007).

“Knowing that” was based on the specific competences defined in Latin America, Spain and North America for obtaining a Degree in Medicine (Domínguez & Hermosilla, 2010; Abreu et al., 2008; Association of American Medical Colleges, 2008; General Medical Council, 2018). The most evaluated subjects were Clinical Sciences and Critical Thinking, while Basic and Humanistic Sciences were among the least assessed. Evaluation thus focused on the professional areas of the degree, which address the health-disease cycle, leaving out the humanistic and basic training aspects that provide the essential principles of the profession. Among the techniques and instruments recommended to assess this knowledge, the most widely used were case discussions (94 %), oral evaluations (82 %) and written evaluations (82 %). Script concordance tests (6 %), portfolios (9 %) and objective structured clinical team examinations (3 %) were rarely used or absent. A limited range of evaluation techniques and instruments was therefore used to evaluate “knowing that”, with little attention to their complementarity.

With respect to “knowing how” (Flores et al., 2012; Reta et al., 2006), the least evaluated elements were the design and execution of an elementary scientific project, the recognition of cases that need to be transferred, and the completion of informed consent. Thus, although these skills are regionally and internationally considered specific and necessary competences for graduating as a doctor, they are not taught and therefore not evaluated. Medical students can consequently qualify as doctors without any evidence that they have acquired the skills required to practise professionally. Among the recommended techniques and instruments (Allende et al., 2018), oral evaluations (95 %), written evaluations (92 %) and case discussions (88 %) were the most widespread, while the script concordance test (3 %), the objective structured clinical team examination (15 %), peer evaluation (30 %) and the objective structured clinical group examination (18 %) were the least implemented. This shows that the techniques and instruments were seldom combined and triangulated so as to complement each other, as recommended for evaluation (Tejada, 2015; Ruiz, 2001).

As for “knowing to be” (Flores, 2012; Abreu et al., 2008; Reta et al., 2006), the most evaluated competences were the professional values of responsibility, empathy, honesty, integrity and punctuality, and respect for the patient, the other members of the health team and the community, followed by teamwork skills and commitment to learning. The most widely used techniques and instruments (Wass et al., 2001) were direct observation (91 %) and the objective structured clinical examination (81 %). The least used, or not used at all, were portfolios (12 %), the objective structured clinical team examination (6 %) and the objective structured clinical group examination (9 %).

Finally, regarding “knowing how to transfer” (Aguirre et al., 2013; Morán, 2016), direct observation was the most widespread of the recommended techniques and instruments, while the least used were the portfolio (18 %), the mini clinical evaluation exercise (18 %) and self-evaluation (18 %). Both “knowing to be” and “knowing how to transfer” were therefore assessed mainly by direct observation (91 %), without an accompanying evaluation instrument.
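To make the pattern across domains easier to compare, the sketch below collects the usage percentages stated in the Discussion and lists, per domain, the instruments reported by at least half of respondents. The 50 % cut-off is an assumption chosen for illustration, and “knowing how to transfer” is omitted because no separate percentage is given for its most used technique.

```python
# Usage percentages as stated in the Discussion (only explicit values)
USAGE = {
    "knowing that": {
        "case discussion": 94, "oral evaluation": 82, "written evaluation": 82,
        "portfolio": 9, "script concordance test": 6,
        "objective structured clinical team examination": 3,
    },
    "knowing how": {
        "oral evaluation": 95, "written evaluation": 92, "case discussion": 88,
        "peer evaluation": 30,
        "objective structured clinical group examination": 18,
        "objective structured clinical team examination": 15,
        "script concordance test": 3,
    },
    "knowing to be": {
        "direct observation": 91,
        "objective structured clinical examination": 81,
        "portfolio": 12,
        "objective structured clinical group examination": 9,
        "objective structured clinical team examination": 6,
    },
}


def widely_used(usage, threshold=50):
    """Instruments reported by at least `threshold` % (an assumed cut-off)."""
    return {
        domain: sorted(name for name, pct in tools.items() if pct >= threshold)
        for domain, tools in usage.items()
    }


for domain, tools in widely_used(USAGE).items():
    print(f"{domain}: {len(tools)} of {len(USAGE[domain])} -> {tools}")
```

The output mirrors the argument in the text: the same three instruments dominate both “knowing that” and “knowing how”, while structured clinical examinations, portfolios and script concordance tests remain marginal.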

5. Conclusions and proposal of the Innovation and Pedagogical Quality Model.

The current assessment practices of teachers in the Degree in Medicine at the Faculty of Medical Sciences of UNAN-Managua can essentially be described as Tyler-behaviourist (Domínguez, 2000; Tejada, 2017). It should be noted, however, that even though the programme of study in force is based on objectives, the various competences of the Degree in Medicine are unevenly evaluated. This issue should be addressed in future curricular improvement initiatives.

The absence of diagnostic evaluation means that teachers are unaware of students’ prior experience or knowledge of the subject to be studied (Martínez et al., 2017). They therefore lack the elements needed to judge and adjust the content they teach, their teaching strategies and the evaluation criteria. This also shows that health professionals who teach concentrate on the evaluation of learning and fail to consider assessment as a support for learning (Earl, 2013).

Furthermore, evaluation was based on obtaining evidence that specific teaching objectives had been achieved, relying on data collected through objective tests and predetermined observation. This creates the risk of non-comprehensive evaluations in which diagnostic evaluation is rarely practised, so that students’ sociocultural characteristics, knowledge and previous experiences remain unknown. This, in turn, limits the possibility of adjusting the teaching process to these particularities.

The evaluation criteria and elements were mainly established by the authorities and teachers, with little student participation. Moreover, these criteria and elements were not uniform and varied from teacher to teacher. Yet evaluations should not appear to be surprising or arbitrary initiatives of the teacher: students should be able to manage their own learning, and to this end the objectives and evaluation criteria must be clearly defined and understood by them (Arribas, 2017).

Moreover, variations in terms of the object of evaluation, a pillar of the behaviourist conception, were striking: not all specific competences were evaluated in a uniform way, implying that not all students developed the same, necessary competences to practice professionally.

There was limited diversity in the techniques used to evaluate “knowing that” (Nolla, 2014), and instruments atypical of the medical sciences were applied. The same occurred with “knowing how”, “knowing to be” and “knowing how to transfer”: current evaluation practices overlook the recommendations on the triangulation and complementarity of techniques and instruments (Champin, 2014; Liderman et al., 2016; Arribas, 2017).

In future studies, it would be highly relevant to include graduates, who could help to improve the evaluation of competences derived from “knowing how to unlearn”, as these involve skills that help to address the different problematic situations faced in professional practice (Domínguez-Fernández et al., 2017).

The CEFIMM evaluation model (Context, Evaluator/Evaluated, Purpose, Moments, Methodology) was thus designed to address the issues above. The essential evaluation dimensions based on the CEFIMM model are described in the table below.

Table 1. Basic dimensions of the CEFIMM model.

| Dimension | Description |
|---|---|
| Objective of the evaluation | Execute a holistic assessment that promotes learning and is not limited to verifying achievements. |
| Participants | Evaluator and evaluated working in a coordinated manner, with systematic feedback playing a predominant role. |
| Object | The different evaluation dimensions defined in the curricular document, made concrete in the achievement indicators of the competences to be attained. |
| Methodology | Mixed, combining qualitative and quantitative methods and taking into account the context in which the evaluation process takes place. |
| Purpose | Diagnostic, formative and summative, throughout the professional training process. |
| Moment | Initial, continuous, final and deferred, conducted systematically. Results and impact are evaluated in the deferred evaluation. |
| Techniques and instruments | Both qualitative and quantitative, using a wide variety to ensure their complementarity and triangulation. |
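One way to make the dimensions of Table 1 operational, for instance when reviewing a course’s evaluation plan, is to encode them as a record with a check for missing elements. The sketch below is a hypothetical encoding, not part of the published model: the class and field names, the rule of at least two instruments, and the qualitative/quantitative labels assigned to each instrument are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical encoding of the CEFIMM dimensions summarised in Table 1
REQUIRED_PURPOSES = {"diagnostic", "formative", "summative"}
REQUIRED_MOMENTS = {"initial", "continuous", "final", "deferred"}


@dataclass
class EvaluationPlan:
    context: str
    competence: str
    purposes: set[str] = field(default_factory=set)
    moments: set[str] = field(default_factory=set)
    # technique/instrument name -> "qualitative" or "quantitative" (assumed)
    instruments: dict[str, str] = field(default_factory=dict)
    criteria_agreed_with_students: bool = False

    def gaps(self) -> list[str]:
        """List the ways in which the plan departs from the Table 1 dimensions."""
        problems = []
        if missing := REQUIRED_PURPOSES - self.purposes:
            problems.append(f"missing purposes: {sorted(missing)}")
        if missing := REQUIRED_MOMENTS - self.moments:
            problems.append(f"missing moments: {sorted(missing)}")
        if set(self.instruments.values()) != {"qualitative", "quantitative"}:
            problems.append("instruments are not both qualitative and quantitative")
        if len(self.instruments) < 2:
            problems.append("fewer than two instruments: no triangulation")
        if not self.criteria_agreed_with_students:
            problems.append("criteria not agreed between evaluator and evaluated")
        return problems


plan = EvaluationPlan(
    context="clinical rotation, fourth year",
    competence="Performs the anamnesis, describing all the relevant information",
    purposes={"diagnostic", "formative", "summative"},
    moments={"initial", "continuous", "final"},
    instruments={"direct observation checklist": "qualitative",
                 "OSCE station": "quantitative"},
    criteria_agreed_with_students=True,
)
print(plan.gaps())  # ["missing moments: ['deferred']"]
```

In this hypothetical plan the only gap is the deferred evaluation, the moment the model adds to conventional practice.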

The CEFIMM model fosters comprehensive evaluation (Domínguez-Fernández et al., 2019) and leads those involved to regard the assessment system as fair, since it places emphasis on agreeing on the evaluation criteria and instruments. In addition, thanks to the diversity and complementarity of the techniques and instruments, the limitations of any single one are offset by the others. Lastly, the evaluation indicators are stated explicitly, which helps to prevent inter-observer variability.
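The article does not say how inter-observer variability would be measured. One common option, not used in the study, is Cohen’s kappa, kappa = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement between two evaluators and p_e the agreement expected by chance from their marginal frequencies. The ratings below are hypothetical: two teachers scoring the same ten students as “achieved” (A) or “not achieved” (N) on one indicator.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items on a nominal scale.

    kappa = (p_o - p_e) / (1 - p_e). Undefined (division by zero) when both
    raters use a single identical category, so that p_e == 1.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed proportion of agreement
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's category frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = freq_a.keys() | freq_b.keys()
    p_e = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of 10 students by two teachers
teacher_1 = ["A", "A", "A", "A", "A", "A", "N", "N", "N", "N"]
teacher_2 = ["A", "A", "A", "A", "A", "N", "N", "N", "N", "A"]
print(round(cohens_kappa(teacher_1, teacher_2), 2))  # 0.58
```

Here the teachers agree on 8 of 10 students, but because 52 % agreement would be expected by chance, kappa is only about 0.58. Explicit indicators would be expected to raise it.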

We believe that the presented model facilitates timely student feedback, self-evaluation and co-evaluation as well as the identification of learning gaps. Moreover, the assessment system adapts to the needs and characteristics of the context, as it can be adjusted to the students’ different levels or areas of knowledge. The model therefore contributes significantly to the improvement of competence-based assessment of future doctors.

6. Funding.

This study was conducted within the framework of the Doctoral Programme in Management and Quality of Education of the Faculty of Education and Languages of the UNAN-Managua, resulting in the doctoral thesis of the first author of this publication, directed by the two other authors of the article.

REFERENCES

; ; ; ; ; (2008). Perfil por competencias del médico de grado mexicano. México DF: Asociación Mexicana de Facultades y Escuelas de Medicina, A.C.

& (2013). Resultados de aprendizaje. Política de equiparación de competencias de la carrera a resultados de aprendizaje (OCM.0002). http://www.udlaquito.com/pdf/plancurricular/Perfil%20de%20Egreso/Documento%20del%20Perfil%20de%20Egreso%20de%20la%20carrera/MedicinaPerfi_RDA_ERquiparacio%CC%81n.pdf

(2018). Propuesta de un examen clínico objetivo estructurado como evaluación final de competencias de egreso en la carrera de tecnología médica. Educación Médica, 20(2), 1-6. https://doi.org/10.1016/j.edumed.2017.12.008

(2017). La evaluación de los aprendizajes. Problemas y soluciones. Revista de Currículum y Formación de Profesorado, 21(4), 381-404. http://www.redalyc.org/pdf/567/56754639020.pdf

(2007). Modelos de evaluación. Revista Universitara de Sociologie, 4(2), 40-70. https://cis01.central.ucv.ro/revistadesociologie/files/rus-2-2007.pdf#page=40

(2008). Recommendations for Preclerkship Clinical Skills Education for Undergraduate Medical Education. Task Force on the Clinical Skills Education of Medical Students. https://www.aamc.org/download/130608/data/clinicalskills_oct09.qxd.pdf.pdf

(2018). Evaluación de los contenidos de Ingeniería de Mantenimiento en la formación del Ingeniero Eléctrico en el Instituto Tecnológico de Santo Domingo. International Journal of Educational Research and Innovation (IJERI), 10, 108-125. https://www.upo.es/revistas/index.php/IJERI/article/view/3468

(2021). La innovación en el aula universitaria a través de la realidad aumentada. Análisis desde la perspectiva del estudiantado español y latinoamericano. Revista Electrónica Educare, 25(3), 1-17.

(2008). Fundamentos teóricos para la implementación de la didáctica en el proceso de enseñanza-aprendizaje. Cienfuegos, Cuba: Eumed.

(2009). Guía para la evaluación de competencias en Medicina. España: Agència per a la Qualitat del Sistema Universitari de Catalunya.

(2018). Visión general del enfoque por competencias en Latinoamérica. Revista de Ciencias Sociales, 24(4), 114-125.

(2014). Evaluación por competencias en la educación médica. Rev Peru Med Exp Salud Pública, 31(3), 566-571. http://www.scielo.org.pe/pdf/rins/v31n3/a23v31n3.pdf

(2021). Competencias genéricas en la universidad. Evaluación de un programa formativo. Educación XX1, 24(1), 297-327. http://doi.org/10.5944/educXX1.26846

(2004). Investigación cualitativa. Guía práctica. http://repositorio.utp.edu.co/dspace/bitstream/handle/11059/3365/Investigaci%C3%B3n%20cualitativa.%20pdf.PDF?sequence=4&isAllowed=y

(2010). Propuesta de planificación y reflexión sobre el trabajo docente para el desarrollo de competencias en el EEES. XXI Revista de Educación, 12, 63-80. http://uhu.es/publicaciones/ojs/index.php/xxi/article/view/1126/1759

(2017). Análisis del desarrollo de competencias en la formación inicial del profesorado de Educación Superior en España. Notandum, 44-45, 69-88. http://dx.doi.org/10.4025/notandum.44.7

(2000). Concepciones de la evaluación. In G. Domínguez (Ed.), Evaluación y educación: Modelos y propuestas (pp. 21-62). http://cvonline.uaeh.edu.mx/Cursos/Maestria/MTE/EvaluacionAprendizajeEV/Unidad%201/eval_edu.pdf

(2019). Las situaciones reales del docente como estrategia de aprendizaje inicial del profesorado de secundaria: el Modelo SIRECA. Profesorado, 23(3), 1-21.

(2013). Assessment of Learning, for Learning, and as Learning. In Assessment as Learning: Using classroom assessment to maximize student learning (2nd ed., pp. 25-34). https://books.google.com.ni/books?hl=es&lr=&id=MlPGImQEh4MC&oi=fnd&pg=PP1&dq=concept+of+educational+assessment&ots=SLbKawaF7N&sig=fT5bgn2cBnEqIPemtqtj_q7p-hw&redir_esc=y#v=onepage&q&f=false

(2012). Evaluación del aprendizaje en la educación médica. Revista de la Facultad de Medicina de la UNAM, 55(3), 42-48. http://www.medigraphic.com/pdfs/facmed/un-2012/un123h.pdf

(2013). El método Delphi para la consulta a expertos en la investigación científica. Revista Cubana de Salud Pública, 39(2), 253-267. http://scielo.sld.cu/pdf/rcsp/v39n2/spu07213.pdf

(2018). Outcomes for graduates (pp. 1-28). https://www.gmc-uk.org/-/media/documents/outcomes-for-graduates-a4-5_pdf-78071845.pdf

Aproximación a la evaluación del aprendizaje. Gaceta médica, 29, 63-69. http://www.scielo.org.bo/pdf/gmb/v29n1/a12.pdf

(2010). Metodología de la investigación. México: McGraw-Hill Educación.

(2018). El currículo universitario por el enfoque de competencia, su pertenencia y nivel de exigencia en el contexto nacional y local. International Journal of Educational Research and Innovation (IJERI), 9, 1-15. https://www.upo.es/revistas/index.php/IJERI/article/view/2423

(2022). Evaluación de los aprendizajes por competencias: Una mirada teórica desde el contexto colombiano. Revista de Ciencias Sociales (Ve), XXVIII(1), 106-122.

(2016). Evaluation and feedback. In P. Thomas, D. Kern & M. Hughes (Eds.), Curriculum development for medical education (pp. 122-164).

; ; ; ; ; ;; ; ; ; (2017). Evaluación diagnóstica y formativa de competencias en estudiantes de medicina a su ingreso al internado médico de pregrado. Gaceta médica de México, 153, 6-15. DOI: http://www.anmm.org.mx/GMM/2017/n1/GMM_153_2017_1_006-015.pdf

(2009). Formación basada en competencias. Revista de Investigación Educativa, 27(1), 125-147. http://www.researchgate.net/profile/Benito_Echeverria_Samanes/publication/263572564_Formacin_basada_en_competencias/links/02e7e53b41edf2f8e2000000.pdf

(2016). La evaluación del desempeño o de las competencias en la práctica clínica. 2.ª parte: tipos de formularios, diseño, errores en su uso, principios y planificación de la evaluación. Educación Médica, 18(1), 2-12. http://dx.doi.org/10.1016/j.edumed.2016.09.003

(2014). Instrumentos de evaluación y sus características. In J. Núñez, J. Palés & R. Rigual (Dirs.), Guía para la evaluación de la práctica clínica en las facultades de Medicina. Instrumentos de evaluación e indicaciones de uso (pp. 33-44). http://www.sedem.org/resources/guia-evaluacion-cem-fl_e_book.pdf

(2006). Competencias médicas y su evaluación al egreso de la carrera de medicina en la Universidad Nacional de Cuyo (Argentina). Educación Médica, 9(2), 75-83. http://files.sld.cu/reveducmedica/files/2010/10/competencias-med-y-su-eval-al-egreso.pdf

(2022). Aplicación del método Delphi en el diseño de un marco para el aprendizaje por competencias. Revista de Investigación Educativa, 40(1), 219-235.

(2012). Descripción y usos del método Delphi en investigaciones del área de la salud. Investigación en Educación Médica, 1(2), 90-95. http://riem.facmed.unam.mx/sites/all/archivos/V1Num02/07_MI_DESCRIPCION_Y_USOS.PDF

(2001). Assessment of clinical competence. The Lancet, 357, 945-949. http://www.ceesvandervleuten.com/application/files/2314/2867/6321/Assessment_of_clinical_competence.PDF

(2008). La técnica Delphi y la investigación en los servicios de salud. Ciencia y Enfermería, XIV(1), 9-15. http://www.scielo.cl/pdf/cienf/v14n1/art02.pdf

(2014). Evaluación del aprendizaje: Un acercamiento en Educación superior. In CINDA, Evaluación del aprendizaje en innovaciones curriculares de la Educación superior. Chile: Copygraph. http://www.cinda.cl/download/libros/2014%20-%20Evaluaci%C3%B3n%20de%20los%20aprendizajes.pdf