Comparativa de indicadores de supervisión de calidad en la formación no presencial

Comparison of quality supervision indicators in remote training

Nuria Falla-Falcón

University of Pablo de Olavide

Eloy López-Meneses

University of Pablo de Olavide

Miguel Baldomero Ramírez-Fernández

University of Pablo de Olavide

Samuel Crespo-Ramos

University of Pablo de Olavide

RESUMEN

Esta investigación analiza la comparativa de indicadores de supervisión de calidad en la formación no presencial debida al escenario de la docencia virtual sobrevenida originada por el COVID-19, a través de los instrumentos EduTOOL@ y SulodiTOOL®. Surge como línea de Investigación de la Cátedra de Educación y Tecnologías Emergentes, Gamificación e Inteligencia Artificial de la Universidad Pablo de Olavide (Sevilla). Esta comparativa arroja que hay dimensiones, subfactores e indicadores que son comunes a las dos herramientas, con una diferencia de ponderaciones del 30 % superior en el primer instrumento, y viceversa, es decir, indicadores que no son comunes a ambas, con una ponderación del 30 % superior en el segundo instrumento. En esta línea, la investigación realiza un análisis gráfico de áreas de importancia de las dimensiones e indicadores de ambas herramientas, creadas por las ponderaciones de dichos indicadores.

PALABRAS CLAVE

Formación; Calidad de la Educación; Supervisión Educativa; Enseñanza no presencial; Entornos de Aprendizaje Virtuales.

ABSTRACT

This research compares quality supervision indicators for non-face-to-face training, prompted by the shift to virtual teaching caused by COVID-19, using the EduTOOL® and SulodiTOOL® instruments. It arises as a research line of the Chair of Education and Emerging Technologies, Gamification and Artificial Intelligence of the Pablo de Olavide University (Seville). The comparison reveals that some dimensions, subfactors and indicators are common to both tools, and their combined weighting is about 30 percentage points higher in the first instrument; conversely, the indicators that are not common to both carry a weighting about 30 percentage points higher in the second instrument. The research also performs a graphic analysis of the areas of importance of the dimensions and indicators of both tools, generated from the weightings of those indicators.

KEYWORDS

Training; Quality of Education; Educational Supervision; Non-face-to-face Teaching; Virtual Learning Environments.

1. Introduction.

Quality in education is a difficult concept that needs to be defined, as does what is considered good learning (Conole, 2013), especially in the new scenario marked by COVID-19. When an e-learning evaluation instrument that does not refer explicitly to MOOCs is used (Arias, 2007), it must therefore be taken into account that MOOCs share common features with online courses.

The MOOC movement is undoubtedly a milestone in 21st-century education and has led to a revolution in the continuous training model (Vázquez & López, 2014). Information and communication technologies have transformed daily life, and their use has moved into the educational field, changing the way we learn and teach today. Against this backdrop, massive open online courses have emerged as an opportunity for everyone to learn, which has brought many changes to education. Their main advantages are that they are free, foster collaboration networks and allow flexible hours; their disadvantages include dropout, courses that are not adapted to or available on some devices, and a lack of tracking. The main idea is to build on the advantages of virtual training, given the great benefits it brings to education, while trying to resolve the disadvantages observed in the use of MOOCs, in order to make their use more effective (Vázquez-Cano, López-Meneses, Gómez-Galán & Parra-González, 2021).

The foundation of MOOCs is connectivism, an epistemological system that provides ideas about how certain learning phenomena occur between connected students but lacks the nature and structure of a theory (Zapata-Ros, 2013). For this reason, one disadvantage is the lack of harmonized studies that provide a holistic view of the indicators that improve student participation in MOOCs. In fact, the coronavirus pandemic has accelerated the adoption of MOOCs, and student participation has become even more essential to the success of this educational innovation.

In this way, quality is an emerging field for researchers concerned with measuring this type of virtual training qualitatively and quantitatively. Studies thus focus on carefully evaluating what these courses offer in terms of pedagogical value for training through the Internet and, more importantly, how they can be improved in this respect (Aguaded, 2013; Guàrdia, Maina & Sangrà, 2013). Along these lines, it is not so evident that MOOCs offer quality training (Martín, González & García, 2013), and they would need to improve if they are to be a disruptive milestone (Roig, Mengual-Andrés & Suárez, 2014).

Thus, the so-called t-MOOCs tend to rely on the completion of tasks by the student. The presence of technologies in educational tasks means that the skills teachers must possess are broader than the mere mastery of content and teaching methodologies, which is why it is necessary to emphasize the development of teaching digital competence (Cabero-Almenara & Romero-Tena, 2020).

In this sense, among the standards and consortia developed for the quality of virtual courses (Hilera & Hoya, 2010), this research has chosen to analyze the indicators of two tools for quality supervision in non-face-to-face training: EduTOOL® and SulodiTOOL®. Its main contribution to the field of assessment instruments for non-face-to-face training is the approach it offers for supervising teaching digital competence, a key aspect of the advantages that virtual environments are bringing to education.

2. Theoretical framework.

2.1. Teaching digital competence as a key aspect of supervision.

In virtual environments, it is necessary to determine some basic ICT competencies for teachers, among which communication skills are essential. The roles most commonly attributed to the teacher in the digital era are: organizer, guide, generator, companion, coach, learning manager, counselor, facilitator, tutor or advisor (Viñals & Cuenca, 2016).

According to Gordon (2022), technical competencies include:

• Skills to use educational hardware and software for virtual education.

• Knowledge of the appropriate pedagogy and didactics to teach with ICT and virtually. This implies choosing the most appropriate tool according to the objective for which it was designed, not just the didactic one.

• Media competencies, which include critically selecting the media, content and forms of communication in the learning process with educational, human and social criteria (Roldán, 2005).

• Lifelong learning skills. The teacher must stay up to date with new technologies that are developed and can be integrated into teaching.

• Educational design skills. This competence includes correctly developing teaching materials and activities through ICT and new media. The teacher should not settle for prefabricated programs and information (Jaén, 2005).

According to the above, teaching in a physical environment and teaching virtually are qualitatively different, although both have the same intention: student learning. They are two faces with their own dimensions, in processes in which the teacher/facilitator appears in different facets. Different authors point out that these facets fall within the so-called digital competencies. For Viñals & Cuenca (2016), these comprise five dimensions:

1. Information. Its comprehensive management, from identification and selection to the evaluation of its purpose and its level of relevance for the objectives.

2. Communication. The technical and administrative sufficiency for the effective operation of the resources of virtual learning environments.

3. Content creation. The use of ICT tools and applications, such as videos and multimedia presentations, to create and renew the presentation of academic content, always respecting licenses and intellectual property.

4. Security. The knowledge and application of personal protection methods, data protection, digital identity protection and other dimensions of user security.

5. Problem resolution. Decision making when needs or possible problems are identified. It has to do with digital resources, ways of addressing social differences, solving technical problems, etc.

Rizo-Rodríguez (2020) reviews different authors and groups their interpretations of the teacher's roles into four categories:

1. Pedagogical. The teacher facilitates the construction of knowledge beyond the master class, contributing specialized knowledge, focusing the discussion, asking critical questions and responding to participants’ contributions. The role is that of a mediator of the virtual learning environment.

2. Social. Skills to create a collaborative environment that generates a learning community.

3. Technical. Sufficient operational efficiency to guarantee the effectiveness of digital tools, which generates comfort and security in students.

4. Administrative. Knowledge of the computer applications used to enhance the collaborative environment and knowledge construction.

Similarly, Gordon (2022) points out aspects that must be taken into account by the teacher/facilitator:

1. Content provider. This involves the development of teaching materials in different formats.

2. Tutoring action. More than a guiding teacher, a learning facilitator.

3. Evaluator role. Comprehensive evaluation of the students' learning, the training process and the teacher's own performance.

4. Technical support. Anticipating the difficulties that students may encounter during the course.

5. These roles have individual and group repercussions and determine teaching behavior adapted to virtuality.

6. They are information consultants and, therefore, experienced seekers of information materials and resources.

7. They are collaborators of the group. They favor approaches in coworking spaces and problem solving through collaborative work in the different spaces, synchronous and asynchronous, of the virtual learning environment.

8. They are lone workers. Digital work has more individual implications, since the possibility of working from anywhere, that is, teleworking, is associated with processes of loneliness and isolation. In this sense, ubiquity also has its disadvantages.

9. They are facilitators of learning. Technological environments are focused more on learning how to learn than on classic forms of transmission of information and content.

The constant in specialized literature is the change of paradigms. Teachers have a role as knowledge facilitator that involves the use of an advanced, complex, multidimensional and changing tool, which requires not only technical skills but also positive attitudes in the face of permanent technological disruption.

On the other hand, the Resolution of July 1, 2022, of the General Directorate of Evaluation and Territorial Cooperation, which publishes the Agreement of the Education Sector Conference on the certification, accreditation and recognition of teaching digital competence, states, on the basis of article 7 of Organic Law 2/2006, of May 3, on Consolidated Education, that educational Administrations may agree on common criteria and objectives in order to improve the quality of the educational system and guarantee equity. The Education Conference will promote these types of agreements and will be informed of all those adopted. Accordingly, an annex to the agreement explains the certification, accreditation and recognition procedure for teaching digital competence, including the levels of teaching digital competence and their reference to the teaching digital competence framework: A1, A2, B1, B2, C1 and C2. For information, the accreditation procedure for the C2 advanced performance level is shown in Table 1.

Table 1. Accreditation procedure for advanced level C2.

The C2 level may be accredited by areas, through the procedures determined by the educational Administrations, within the scope of their powers, from among the following:

1. Evaluation through performance observation: passing an evaluation of level C2 of the current MRCDD through observation of performance, following the public evaluation guide determined by the educational Administrations, within the scope of their powers.

2. Accreditation through a process of analysis and validation of evidence compatible with the C2 level indicators of the current MRCDD. The following will be considered evidence and must be documented and specifically related to teaching digital competencies:

– The nominal prizes awarded by the educational Administrations.

– Publications with NIPO and/or ISBN, ISSN, DOI or URL.

– Participation as a speaker in regional, national and international conferences.

– Coordination and authorship of research and educational innovation projects.

– Recognitions by educational Administrations of having implemented significant improvements in the educational field.

– Curriculum to evaluate the professional career.

– As well as any other evidence that accredits the C2 level.

Source: Annex I of the Resolution of July 1, 2022, of the General Directorate of Evaluation and Territorial Cooperation, which publishes the Agreement of the Education Sector Conference on the certification, accreditation and recognition of teaching digital competence.

2.2. The EduTOOL® instrument

EduTOOL® is an instrument for assessing the quality of virtual courses, capable of analyzing and identifying the features of their didactic quality across three dimensions (Baldomero & Salmerón, 2015). The instrument, a registered trademark in the Spanish Patent and Trademark Office (current file number: 3,087,298), results from the doctoral thesis of Dr. Miguel Baldomero Ramírez Fernández, entitled “Diffuse model of rules for the analysis and evaluation of MOOCs with the UNE 66181 (2012) standard for quality of virtual training”, which received the extraordinary doctorate award 2015/2016 from the Pablo de Olavide University of Seville and was developed in the Computational Intelligence Laboratory (LIC). The tool applies the UNE 66181 standard for virtual training to virtual courses using fuzzy logic. The model therefore provides the same weighted indicators as the UNE 66181:2012 standard for the quality of virtual training, which are also valid for supervising all aspects of any non-face-to-face teaching in non-university educational centers. Table 2 shows the weightings of the dimensions and subfactors of the instrument.

Table 2. Weightings of the dimensions and subfactors of the EduTOOL® instrument.

| Dimensions | Dimension weights | Subfactors | Subfactor weights |
| --- | --- | --- | --- |
| 1. Recognition of training | 9.51 | 1.1. Recognition of training for employability | 9.51 |
| 2. Learning methodology | 44.64 | 2.1. Teaching design | 10.45 |
| | | 2.2. Training resources and learning activities | 14.45 |
| | | 2.3. Tutorships | 9.13 |
| | | 2.4. Technological environment | 10.61 |
| 3. Accessibility levels | 45.85 | 3.1. Hardware accessibility | 13.49 |
| | | 3.2. Software accessibility | 14.06 |
| | | 3.3. Web accessibility | 18.25 |

Source: Baldomero & Salmerón (2015).
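As a quick consistency check on Table 2, the following minimal sketch sums the subfactor weights of each dimension and compares the result with the stated dimension weight. The dictionary layout and the function name `check_weights` are illustrative assumptions, not part of the instrument. With the values as transcribed, the subfactors of dimension 3 add up to 45.80 against a stated 45.85; this 0.05 gap may be a rounding or transcription effect.

```python
from decimal import Decimal

# Weights transcribed from Table 2; the layout of this dictionary is an assumption.
EDUTOOL = {
    "1. Recognition of training": ("9.51", ["9.51"]),
    "2. Learning methodology": ("44.64", ["10.45", "14.45", "9.13", "10.61"]),
    "3. Accessibility levels": ("45.85", ["13.49", "14.06", "18.25"]),
}


def check_weights(table):
    """Compare each stated dimension weight with the sum of its subfactors."""
    report = {}
    for dimension, (stated, subfactors) in table.items():
        subtotal = sum(Decimal(w) for w in subfactors)
        report[dimension] = (Decimal(stated), subtotal, Decimal(stated) - subtotal)
    return report


report = check_weights(EDUTOOL)
grand_total = sum(stated for stated, _, _ in report.values())

for dimension, (stated, subtotal, diff) in report.items():
    print(f"{dimension}: stated {stated}, subfactors {subtotal}, difference {diff}")
print(f"Sum of dimension weights: {grand_total}")
```

Decimal arithmetic is used here so that two-decimal weights are added exactly, without binary floating-point noise.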

2.3. The SulodiTOOL® instrument

This tool has 10 dimensions of assessment indicators for the supervision of non-face-to-face teaching in non-university educational centers. The instrument, a registered trademark in the Spanish Patent and Trademark Office (file number in process: M4177803), distributes its weightings across 6 different scenarios as High Weight (PA), Medium Weight (PM) and Low Weight (PB), according to the type of non-university teaching and the asynchronous or synchronous modality, as shown in Table 3. It also includes a quantitative and qualitative assessment model of the quality of non-face-to-face teaching and a report with the deficiencies and proposals for improvement of each dimension, by indicators. Each indicator is dichotomous (yes/no): it measures the clarity of the claims and is taken as met when at least 75 % of its performance is reached.
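The dichotomous rule can be illustrated with a minimal sketch. Only the 75 % threshold comes from the text; the aggregation used here (adding the weights of the items that are met), the treatment of each row of Table 3 as a single yes/no item, the function names and the example performance values are all assumptions made for illustration, not the instrument's actual assessment model.

```python
THRESHOLD = 0.75  # from the text: an item counts as "yes" at 75 % performance or more

# Scenario 1 weights (percent) from Table 3.
SCENARIO_1 = {
    "Regulations and Procedures": 6.52,
    "Teacher training": 10.12,
    "Overall quality of content": 10.45,
    "Training design and methodology": 13.05,
    "Motivation and participation": 10.92,
    "Learning materials": 10.54,
    "Tutoring": 9.60,
    "Collaborative learning": 8.50,
    "Activities, tasks and feedback": 10.78,
    "Formative evaluation": 9.52,
}


def is_met(performance):
    """Dichotomous answer: True ("yes") when performance reaches the threshold."""
    return performance >= THRESHOLD


def weighted_score(performance, weights):
    """ASSUMPTION: the overall score is the sum of the weights of the items met."""
    met = (w for name, w in weights.items() if is_met(performance.get(name, 0.0)))
    return round(sum(met), 2)


def deficiencies(performance, weights):
    """Items answered "no", as candidates for the improvement report."""
    return [name for name in weights if not is_met(performance.get(name, 0.0))]


# Hypothetical performance levels (0.0 to 1.0), for illustration only.
example = {name: 0.9 for name in SCENARIO_1}
example["Tutoring"] = 0.50
example["Collaborative learning"] = 0.74

print(weighted_score(example, SCENARIO_1))
print(deficiencies(example, SCENARIO_1))
```

In this hypothetical case two items fall below the threshold, so their weights (9.60 and 8.50) are excluded from the score and they are listed as deficiencies.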

In Social Sciences, the design of instruments must meet two fundamental conditions for their application and validation: content validity and reliability. Thus, content validity is the efficiency with which an instrument measures what it is intended to measure (Chávez, 2004; Hurtado, 2010). That is, the degree to which an instrument reflects a specific content domain of what is being measured and, therefore, that the items chosen are truly indicative of what is to be measured (Hernández, Fernández & Baptista, 2010).

This research bases the content validity of the instrument on the bibliographic review carried out and on the normative theoretical framework that underpins it (the UNE 66181:2012 standard and the LOMLOE). It is taken as a premise that this standard meets the attributes of an expert judgment, that is, it is considered an informed opinion of people with experience in the subject, who are recognized by others as qualified experts in this matter, and who can provide information, evidence, judgments and evaluations (Escobar & Cuervo, 2008).

With respect to the reliability of the information collection instrument, a measurement is reliable when, applied repeatedly to the same individual or group, or at the same time by different researchers, it gives the same or similar results (Sánchez & Guarisma, 1995). Along the same lines, different authors indicate that the reliability of a measurement instrument refers to the degree to which its repeated application to the same individual or object produces equal results, and to the accuracy of the data in the sense of its stability, repeatability or precision (McMillan & Schumacher, 2010; Hernández, Fernández & Baptista, 2010). In this study, the reliability of the tool is supported by obtaining the same results when it is applied by different researchers and by the use of scales free of deviations, because each item is dichotomous.

Table 3. Weightings of the dimensions of the SulodiTOOL® instrument.

| Indicators | Scenario 1 | Scenario 2 | Scenario 3 | Scenario 4 | Scenario 5 | Scenario 6 |
| --- | --- | --- | --- | --- | --- | --- |
| 1. Regulations and Procedures | 6.52 | 6.34 | 6.39 | 6.36 | 6.29 | 6.35 |
| 2. Teacher training | 10.12 | 9.79 | 9.86 | 10.05 | 9.80 | 10.32 |
| 3. Overall quality of content | 10.45 | 10.81 | 11.09 | 11.01 | 12.09 | 11.72 |
| 4. Training design and methodology | 13.05 | 12.69 | 12.77 | 12.72 | 12.59 | 12.69 |
| 5. Motivation and participation | 10.92 | 11.70 | 11.22 | 11.23 | 10.64 | 10.66 |
| 6. Learning materials | 10.54 | 10.68 | 11.59 | 11.23 | 11.84 | 11.78 |
| 7. Tutoring | 9.60 | 9.13 | 9.20 | 9.14 | 8.37 | 8.86 |
| 8. Collaborative learning | 8.50 | 8.46 | 7.86 | 8.45 | 7.63 | 8.00 |
| 9. Activities, tasks and feedback | 10.78 | 10.87 | 10.85 | 10.52 | 11.07 | 10.29 |
| 10. Formative evaluation | 9.52 | 9.53 | 9.17 | 9.28 | 9.67 | 9.32 |
| Total | 100 | 100 | 100 | 100 | 100 | 100 |

Source: own elaboration.
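The percent ranges used later in Tables 4 and 5 can be derived from Table 3 by taking, for each row, the minimum and maximum weight across the six scenarios. The sketch below does this and also totals each scenario column; the names `weight_ranges` and `scenario_totals` are illustrative assumptions. With the values as transcribed, each column adds up to 100 within rounding (99.99 to 100.00).

```python
# Weights (percent) transcribed from Table 3, scenarios 1 to 6.
SULODITOOL = {
    "1. Regulations and Procedures": [6.52, 6.34, 6.39, 6.36, 6.29, 6.35],
    "2. Teacher training": [10.12, 9.79, 9.86, 10.05, 9.80, 10.32],
    "3. Overall quality of content": [10.45, 10.81, 11.09, 11.01, 12.09, 11.72],
    "4. Training design and methodology": [13.05, 12.69, 12.77, 12.72, 12.59, 12.69],
    "5. Motivation and participation": [10.92, 11.70, 11.22, 11.23, 10.64, 10.66],
    "6. Learning materials": [10.54, 10.68, 11.59, 11.23, 11.84, 11.78],
    "7. Tutoring": [9.60, 9.13, 9.20, 9.14, 8.37, 8.86],
    "8. Collaborative learning": [8.50, 8.46, 7.86, 8.45, 7.63, 8.00],
    "9. Activities, tasks and feedback": [10.78, 10.87, 10.85, 10.52, 11.07, 10.29],
    "10. Formative evaluation": [9.52, 9.53, 9.17, 9.28, 9.67, 9.32],
}


def weight_ranges(table):
    """Minimum and maximum weight of each row across all scenarios."""
    return {name: (min(values), max(values)) for name, values in table.items()}


def scenario_totals(table):
    """Sum of the weights in each scenario column, rounded to two decimals."""
    return [round(sum(column), 2) for column in zip(*table.values())]


ranges = weight_ranges(SULODITOOL)
totals = scenario_totals(SULODITOOL)

for name, (low, high) in ranges.items():
    print(f"{name}: [{low:.2f}-{high:.2f}]")
print("Scenario totals:", totals)
```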

3. Methodological Design.

The methodology applies a mixed research method. The aim is to retain the strengths of both approaches (qualitative and quantitative) and make the data as rich as possible; hence the research is considered intermediate between qualitative and quantitative (Cedeño-Viteri, 2012). The qualitative methodology focused on the analysis and comparison of bibliographic documents on the assessment instruments EduTOOL® and SulodiTOOL®. The quantitative methodology then addressed the analyses of the indicator weights of both tools and their comparison through a graphic representation of the areas of importance in the supervision of the instruments' indicators, created from their weightings with the AutoCAD® application.

4. Results.

Based on the analysis of the dimensions, subfactors and indicators common to the two instruments, shown in Table 4, 4 dimensions and subfactors of the EduTOOL® instrument are common to 5 indicators of the SulodiTOOL® instrument.

Table 4. Dimensions, subfactors and common indicators of the instruments.

| Dimensions and subfactors EduTOOL® | Percentages | Indicators SulodiTOOL® | Percent range |
| --- | --- | --- | --- |
| 2.1. Teaching design | 10.45 | 4. Training design and methodology | [12.59-13.05] |
| 2.2. Training resources and learning activities | 14.45 | 6. Learning materials | [10.54-11.84] |
| | | 9. Activities, tasks and feedback | [10.29-11.07] |
| 2.3. Tutorships | 9.13 | 7. Tutoring | [8.37-9.60] |
| 3. Accessibility levels | 45.85 | 1. Regulations and Procedures | [6.29-6.52] |
| Total | 79.88 | | [48.08-52.08] |

Source: own elaboration.

However, the common dimensions and subfactors of EduTOOL® have an overall weight of almost 80 % (79.88 %), whereas the common indicators of SulodiTOOL® account for about 50 % (a range of 48.08 % to 52.08 % in Table 4). The importance given to these indicators is therefore about 30 percentage points greater in the first instrument than in the second.

Looking more closely, “Teaching design” (10.45 %) and “Tutorships” (9.13 %) have weightings similar to those of “Training design and methodology” and “Tutoring”, respectively. The subfactor “Training resources and learning activities” follows, weighted at least 6 percentage points lower than “Learning materials” and “Activities, tasks and feedback” combined. Finally, the “Accessibility levels” dimension is about 39 percentage points more important in the first instrument than “Regulations and Procedures” in the second.
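The pairwise differences discussed above can be reproduced from the values in Table 4. The following sketch is illustrative only: the function name `gap_range` is an assumption, and where one EduTOOL subfactor maps to two SulodiTOOL indicators their range bounds are simply added, which gives outer bounds rather than per-scenario values.

```python
# (EduTOOL item, EduTOOL weight, matched SulodiTOOL ranges), all from Table 4.
COMMON = [
    ("2.1. Teaching design", 10.45, [(12.59, 13.05)]),
    ("2.2. Training resources and learning activities", 14.45,
     [(10.54, 11.84), (10.29, 11.07)]),
    ("2.3. Tutorships", 9.13, [(8.37, 9.60)]),
    ("3. Accessibility levels", 45.85, [(6.29, 6.52)]),
]


def gap_range(edutool_weight, sulodi_ranges):
    """Difference EduTOOL minus SulodiTOOL, in percentage points, as (min, max).

    ASSUMPTION: several matched ranges are combined by adding their bounds.
    """
    low = sum(lo for lo, _ in sulodi_ranges)
    high = sum(hi for _, hi in sulodi_ranges)
    # The smallest gap uses the highest SulodiTOOL weight, and vice versa.
    return round(edutool_weight - high, 2), round(edutool_weight - low, 2)


gaps = {name: gap_range(weight, ranges) for name, weight, ranges in COMMON}

for name, (smallest, largest) in gaps.items():
    print(f"{name}: {smallest:+.2f} to {largest:+.2f} percentage points")
```

A negative value means the SulodiTOOL weighting is the larger of the two.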

Along these lines, Table 5 shows that 2 subfactors of the EduTOOL® instrument and 5 indicators of the SulodiTOOL® instrument are not common to both. The non-common subfactors of EduTOOL® have an overall weighting of about 20 % (20.12 %), and the non-common indicators of SulodiTOOL® about 50 % (a range of 47.68 % to 52.28 %). That is, the aspects to be assessed that are not common to both instruments are about 30 percentage points more relevant in the second tool.

Table 5. Dimensions, subfactors and non-common indicators of the instruments.

| Dimensions and subfactors EduTOOL® | Percentages | Indicators SulodiTOOL® | Percent range |
| --- | --- | --- | --- |
| | | 2. Teacher training | [9.79-10.32] |
| 1.1. Recognition of training for employability | 9.51 | 3. Overall quality of content | [10.45-12.09] |
| 2.4. Technological environment | 10.61 | 5. Motivation and participation | [10.64-11.70] |
| | | 8. Collaborative learning | [7.63-8.50] |
| | | 10. Formative evaluation | [9.17-9.67] |
| Total | 20.12 | 100 | [47.68-52.28] |

Source: own elaboration.
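The totals in Tables 4 and 5 and the resulting gap of about 30 percentage points can be checked with a short sketch. The helper names and the use of the range midpoint as a single summary value for SulodiTOOL are assumptions made for illustration; the weights and ranges are those of the two tables.

```python
# EduTOOL weights and SulodiTOOL ranges, transcribed from Tables 4 and 5.
EDUTOOL_COMMON = [10.45, 14.45, 9.13, 45.85]
EDUTOOL_NON_COMMON = [9.51, 10.61]
SULODI_COMMON = [(12.59, 13.05), (10.54, 11.84), (10.29, 11.07),
                 (8.37, 9.60), (6.29, 6.52)]
SULODI_NON_COMMON = [(9.79, 10.32), (10.45, 12.09), (10.64, 11.70),
                     (7.63, 8.50), (9.17, 9.67)]


def total(weights):
    return round(sum(weights), 2)


def range_total(ranges):
    """Sum of lower bounds and sum of upper bounds, as in the tables' last row."""
    return (round(sum(lo for lo, _ in ranges), 2),
            round(sum(hi for _, hi in ranges), 2))


def midpoint(bounds):
    """ASSUMPTION: the midpoint is used as a single summary of a range."""
    return round((bounds[0] + bounds[1]) / 2, 2)


edutool_common = total(EDUTOOL_COMMON)
edutool_non_common = total(EDUTOOL_NON_COMMON)
sulodi_common = range_total(SULODI_COMMON)
sulodi_non_common = range_total(SULODI_NON_COMMON)

# Gap in percentage points, each taken in the direction reported in the text.
common_gap = round(edutool_common - midpoint(sulodi_common), 2)
non_common_gap = round(midpoint(sulodi_non_common) - edutool_non_common, 2)

print(edutool_common, sulodi_common, common_gap)
print(edutool_non_common, sulodi_non_common, non_common_gap)
```

Because the minimum and maximum of each indicator occur in different scenarios, the summed bounds of the common and non-common groups do not add up to exactly 100, whereas the two EduTOOL totals do.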

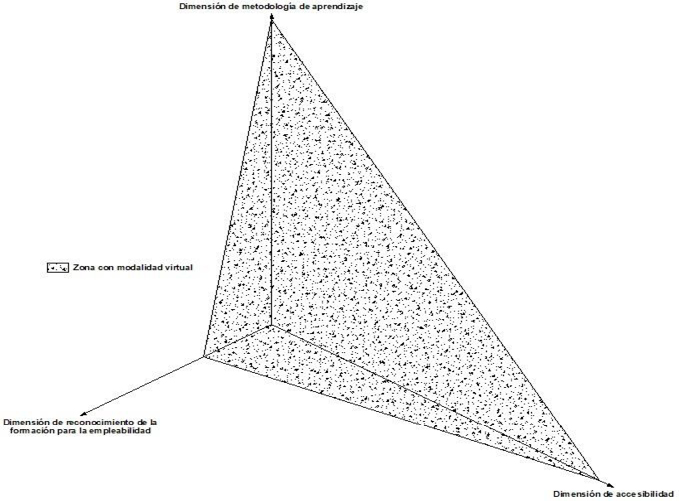

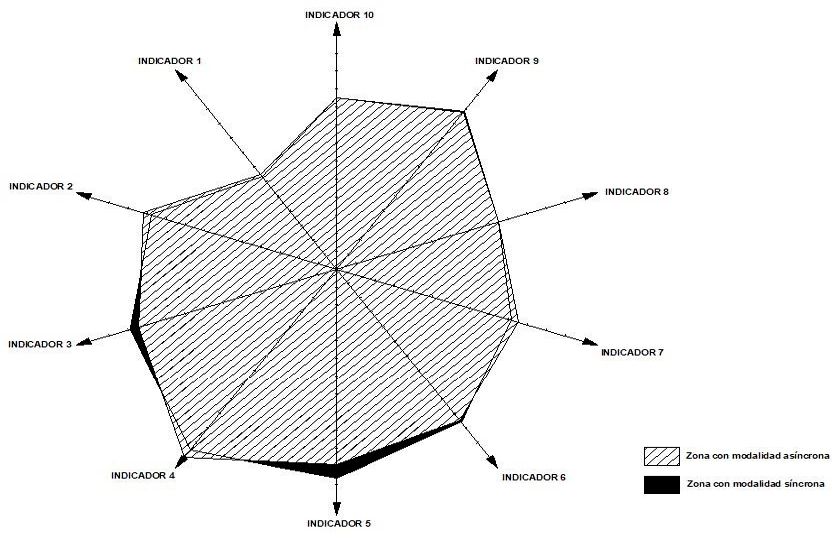

Supervision with the instruments can also be approached through a graphic representation of the areas of importance of the dimensions and indicators of both tools, created from the weights of those indicators. Figure 1 shows this representation for the EduTOOL® instrument and Figure 2 for the SulodiTOOL® instrument, tailored to Primary Education in its 2 scenarios (synchronous and asynchronous modality).

Figure 1. Graphic representation of the area of importance in monitoring the dimensions of the EduTOOL® instrument.

Source: own elaboration through the AutoCAD® application.

Figure 2. Graphic representation of the area of importance in the supervision of the indicators of the SulodiTOOL® instrument in Primary Education (scenarios 1 and 2).

Source: own elaboration through the AutoCAD® application.

5. Conclusions and discussions.

In the scenario of virtual teaching caused by COVID-19, it is necessary to use tools to supervise the quality of non-university training in educational centers, both public and private. Along these lines, this research has made a comparison, both qualitative and quantitative, between two assessment instruments for non-face-to-face teaching.

If the supervision of teaching is represented graphically as areas of importance defined by the indicator weights of both instruments, the area created by the weights of the first instrument is smaller than that of the second.

For all of the above, this study reaches the same conclusions as other research showing that non-face-to-face courses have a solid pedagogical basis in their formats (Glance, Forsey & Riley, 2013). However, although this type of training has grown significantly, there is an evident lack of quantitative quality assessment of virtual training. New interdisciplinary lines of research are therefore needed to focus attention and reflection on the deficiencies in the indicators and dimensions analyzed here. Although some studies include comparative analyses of the main MOOC platforms and the construction and validation of an instrument for measuring perceived MOOC quality (Baldomero, Salmerón & López, 2015; Bournissen, Tumino & Carrión, 2018), assessing the quality of virtual training for efficient supervision remains on the future research agenda. More studies are needed on some quality indicators of virtual teaching, including longitudinal (Stödberg, 2012) and comparative studies (Balfour, 2013). More specifically, research should continue on methods that improve the reliability, validity, authenticity and security of supervisors’ evaluations, on techniques that provide effective automated assessment and immediate feedback systems, and on how these can be integrated into open learning environments (Oncu & Cakir, 2011), so as to give greater guarantees of usability to the quality tools that may be developed.

REFERENCES

Aguaded (2013). The MOOCs revolution, a new education from the technological paradigm? Comunicar, 41, 7-8. https://doi.org/10.3916/C41-2013-a1

Arias (2006). The research project: Introduction to scientific methodology. Episteme.

Baldomero & Salmerón (2015). An instrument for the evaluation and accreditation of the quality of MOOCs. [EduTool®: A tool for evaluating and accrediting the quality of MOOCs.]. Education XX1, 18(2), 97-123. https://doi.org/10.5944/educxx1.13233

Baldomero, Salmerón & López (2015). Comparison between quality evaluation instruments for MOOC courses: ADECUR vs UNE 66181:2012 Standards. RUSC. Universities and Knowledge Society Journal, 12(1), 131-145. https://doi.org/10.7238/rusc.v12i1.2258

Balfour (2013). Assessing writing in MOOCs: Automated essay scoring and Calibrated Peer Review. Research & Practice in Assessment, 8(1), 40-48.

Bournissen, Tumino & Carrión (2018). MOOC: quality assessment and measurement of perceived motivation. IJERI: International Journal of Educational Research and Innovation, 11, 18-32. https://www.upo.es/revistas/index.php/IJERI/article/view/2899

Cabero-Almenara & Romero-Tena (2020). Design of a t-MOOC for training in digital teaching skills: study in development (DIPROMOOC Project). Innoeduca. International Journal of Technology and Educational Innovation, 6(1), 4-13. https://doi.org/10.24310/innoeduca.2020.v6i1.7507

Cedeño-Viteri (2012). Mixed research, a fundamental andragogical strategy to strengthen higher intellectual capacities. Revista Científica, 2(2), 17-36.

Conole (2013). MOOCs as disruptive technologies: strategies to improve the learning experience and quality of MOOCs. Journal of Distance Education, 39, 1-17.

Chávez (2004). Introduction to educative research. Venezuela: Editorial Gráficas SA.

Escobar & Cuervo (2008). Content validity and expert judgment: an approach to its use. Advances in Measurement, 6, 27-36.

Gordon (2022). Teaching skills for working in virtual learning communities. InterSedes, 23(48), 143–162. https://doi.org/10.15517/isucr.v23i48.49417

Glance, Forsey & Riley (2013). The pedagogical foundations of massive open online courses. First Monday, 18(5), 1-12. https://doi.org/10.5210/fm.v18i5.4350

Guàrdia, Maina & Sangrà (2013). MOOC Design Principles. A Pedagogical Approach from the Learner’s Perspective. eLearning Papers, 33, 1-6. https://openaccess.uoc.edu/bitstream/10609/41681/1/In-depth_33_4%282%29.pdf

Hernández, Fernández & Baptista (2010). Investigation methodology. McGraw Hill.

Hilera & Hoya (2010). E-Learning Standards: Reference Guide. University of Alcalá. http://www.cc.uah.es/hilera/GuiaEstandares.pdf

Hurtado (2010). Investigation methodology. Venezuela: Quirón Editorial.

Jaén (2005). A study system for the infovirtual campus. In FUCN (Comp.), Virtual education: reflections and experiences (pp. 37-50). Northern Catholic University Foundation. https://www.ucn.edu.co/institucion/sala-prensa/Documents/educacion-virtual-reflexiones-experiencias.pdf

Martín, González & García (2013). Proposal for evaluating the quality of MOOCs based on the Afortic Guide. Campus Virtual, 2(1), 124-132. http://www.revistacampusvirtuales.es/images/volIInum01/revista_campus_virtuales_01_ii-art10.pdf

McMillan & Schumacher (2010). Research in education: Evidence-based Inquiry. Boston: Pearson Education, Inc.

Standard UNE 66181 (2012). Quality Management of Virtual Training. https://eqa.es/certificacion-sistemas/une-66181

Organic Law 2/2006, of May 3, on Consolidated Education. https://www.boe.es/buscar/pdf/2006/BOE-A-2006-7899-consolidado.pdf

, , & (2022). Indicators for enhancing learners’ engagement in massive open online courses: A systematic review. Computers and Education Open, 3, 100088. https://doi.org/10.1016/j.caeo.2022.100088

Oncu & Cakir (2011). Research in online learning environments: Priorities and methodologies. Computers & Education, 57(1), 1098-1108. https://doi.org/10.1016/j.compedu.2010.12.009

Resolution of July 1, 2022, of the General Directorate of Evaluation and Territorial Cooperation, by which the Agreement of the Education Sector Conference on the certification, accreditation and recognition of teaching digital competence is published.

Rizo-Rodríguez (2020). Role of the teacher and student in virtual education. Multi-Essays Journal, 6(12), 28-37. https://doi.org/10.5377/multiensayos.v6i12.10117

Roig, Mengual-Andrés & Suárez (2014). Evaluation of the pedagogical quality of MOOCs. Curriculum and Teacher Training, 18(1), 27-41. http://www.ugr.es/~recfpro/rev181ART2.pdf

Roldán (2005). Communication and pedagogy for the art of learning. In FUCN (Comp.), Virtual education: reflections and experiences (pp. 51-67). Northern Catholic University Foundation.

Sánchez & Guarisma (1995). Research Methods. Maracay. Bicentenary University of Aragua Editions.

Stödberg (2012). A research review of e-assessment. Assessment and Evaluation in Higher Education, 37(5), 591-604. https://doi.org/10.1080/02602938.2011.557496

Vázquez & López (2014). Evaluation of the pedagogical quality of MOOCs. Curriculum and Teacher Education, 18(1), 3-12. http://www.ugr.es/~recfpro/rev181ed.pdf

Vázquez-Cano, López-Meneses, Gómez-Galán & Parra-González (2021). Innovative university practices on the educational advantages and disadvantages of MOOC environments. Distance Education Journal (RED), 21(66). https://doi.org/10.6018/red.422141

Viñals & Cuenca (2016). The role of the teacher in the digital age. Interuniversity Journal of Teacher Training, 30(2), 103-114. https://recyt.fecyt.es/index.php/RIFOP/issue/view/2859/218

Zapata-Ros (2013). MOOCs, a critical vision and a complementary alternative. The individualization of learning and pedagogical help. Campus Virtual, 1(II), 20-38. http://uajournals.com/ojs/index.php/campusvirtuales/article/view/26