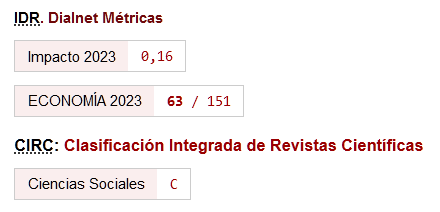

Predicting the harvest level of white shrimp: the case of a small shrimp farm in Tenguel parish, Guayaquil canton, Ecuador

DOI: https://doi.org/10.46661/revmetodoscuanteconempresa.3791

Keywords: prediction, harvest, white shrimp Litopenaeus vannamei, statistical learning, cross-validation, MARS

Abstract

Ecuador's shrimp industry is currently one of the country's non-oil sectors with the strongest growth prospects in international markets. Despite this boom, most small shrimp producers make operational decisions based on empirical knowledge of the business. They rely on neither historical data nor any scientific tool to support those decisions. In this work we implement and compare state-of-the-art statistical learning techniques for predicting the harvest level of white shrimp (Litopenaeus vannamei) at a small shrimp farm located in Tenguel parish, Guayaquil canton, Ecuador. Data from 35 harvests, corresponding to 7 production cycles, were used to fit the models. Real harvest predictions were then made for the following two cycles. The techniques compared are multiple linear regression (MLR) by least squares, CART regression trees, random forests, multivariate adaptive regression splines (MARS) and support vector machines (SVM). Three models produced the lowest cross-validated prediction error: MARS without interactions, the additive MLR model with predictors chosen by Best Subset Selection, and SVM with a linear kernel. The good predictive performance of these methods was confirmed by good real predictions for the following two cycles. State-of-the-art statistical techniques can therefore be of great help in obtaining reliable predictions and, in turn, in improving the operational processes of small shrimp farms.
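The abstract ranks the candidate models by their cross-validated prediction error. The following pure-Python sketch illustrates that idea for one of the compared approaches: an additive least-squares model with Best Subset Selection, scored by k-fold cross-validation. It makes several assumptions that do not come from the study: 5 folds, mean squared error as the loss, and synthetic data with 35 observations and 4 hypothetical predictors. It is not the authors' code or data, and the helper names (`solve`, `fit_ols`, `best_of_size`, `best_subset_cv`) are hypothetical. CART, random forests, MARS or SVM could be scored with the same fold loop by swapping in their own fit and predict steps.

```python
# Minimal sketch (assumption): k-fold cross-validation of an additive
# least-squares model with best subset selection, in pure Python.
# The data below are synthetic; they are NOT the study's harvest data.
import itertools
import random


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, y, cols):
    """Least-squares coefficients [intercept, b_1, ...] via the normal equations."""
    design = [[1.0] + [row[j] for j in cols] for row in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[k] for d in design) for k in range(p)] for i in range(p)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(p)]
    return solve(xtx, xty)


def predict(beta, row, cols):
    """Linear prediction using the selected predictor columns."""
    return beta[0] + sum(b * row[j] for b, j in zip(beta[1:], cols))


def best_of_size(rows, y, size):
    """Subset of `size` predictors with the smallest training residual sum of squares."""
    def rss(cols):
        beta = fit_ols(rows, y, cols)
        return sum((yi - predict(beta, r, cols)) ** 2 for r, yi in zip(rows, y))
    return list(min(itertools.combinations(range(len(rows[0])), size), key=rss))


def best_subset_cv(rows, y, k=5, seed=0):
    """Pick the subset size with the lowest k-fold CV error, then refit on all data."""
    p = len(rows[0])
    idx = list(range(len(y)))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]
    sq_err = [0.0] * (p + 1)  # sq_err[s]: summed held-out squared error for size s
    for test in folds:
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        tr_rows, tr_y = [rows[i] for i in train], [y[i] for i in train]
        for size in range(1, p + 1):
            # Selection is redone inside each fold so held-out rows stay unseen.
            cols = best_of_size(tr_rows, tr_y, size)
            beta = fit_ols(tr_rows, tr_y, cols)
            sq_err[size] += sum((y[i] - predict(beta, rows[i], cols)) ** 2 for i in test)
    size = min(range(1, p + 1), key=lambda s: sq_err[s])
    return best_of_size(rows, y, size), sq_err[size] / len(y)


# Synthetic example: 35 observations, 4 candidate predictors; only x0 and x2 matter.
rng = random.Random(1)
rows = [[rng.random() for _ in range(4)] for _ in range(35)]
y = [10 + 3 * r[0] - 2 * r[2] + rng.gauss(0, 0.05) for r in rows]
cols, cv_mse = best_subset_cv(rows, y)
print(cols, round(cv_mse, 4))
```

Subset selection is repeated inside each training fold rather than once on the full data. This keeps the held-out observations out of the model-building step, so the cross-validated error is not optimistically biased.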




License

Copyright 2020 Revista de Métodos Cuantitativos para la Economía y la Empresa

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Submitting a manuscript to the Journal implies three conditions. The work must not have been published previously (except as an abstract or as part of a thesis). It must not be under consideration for publication by any other journal or publisher. If it is accepted, the authors agree to the automatic transfer of copyright to the Journal for its publication and dissemination. Authors retain the right to use and share their article for personal, institutional or teaching purposes. They likewise retain patent rights, trademark rights (where applicable) and moral rights (including rights to research data).

Articles published in the Journal are subject to the Creative Commons CC-BY-SA (Attribution-ShareAlike) license. Commercial use of the work and of any derivative works is permitted, provided that authorship is acknowledged. Derivative works must be distributed under a license identical to the one governing the original work.

Up to volume 21, version CC-BY-SA 3.0 ES of the license was used; version CC-BY-SA 4.0 has been used since volume 22.